
Most science is collaborative on some level, and an increasing number of colleges and universities and other kinds of institutions are investing in large-scale group science efforts. But are bigger teams really better? A new paper suggests that, when it comes to team science, teams shouldn't get too big, but they should be multinational to have a bigger impact.

Growing “team sizes, increasing interdisciplinarity and intensifying ties across institutional and geographic borders demonstrate how scientific research has evolved from a solitary enterprise to an expanding social movement,” argues “Multinational Teams and Diseconomies of Scale in Collaborative Research,” in the current Science Advances. “However, not all large-scale projects have led to the expected paradigm shift or breakthrough in knowledge.”

The study’s authors are quick to point out that not every large team effort can be expected to produce a scientific breakthrough, and that in some cases large teams have done paradigm-shifting work, such as the discovery of the Higgs boson or the massive feat of DNA sequencing. Rather, the authors say, “The growing prominence of very large research teams despite their associations with diminishing returns of citation impact suggests the impracticalities of science being indiscriminately conducted at the expense of smaller and possibly more efficient teams.”

Such results may be “particularly relevant to the growing needs for research accountability and cost-effective practices,” the paper says.

For their study, lead author David Hsiehchen, a clinical medical fellow at Harvard University, and his team of two co-investigators compared the citation rates of 24 million life and physical sciences research papers to the characteristics of the teams that produced them. The idea was to see if any trends emerged -- which they did, in terms of size and number of different nationalities represented.

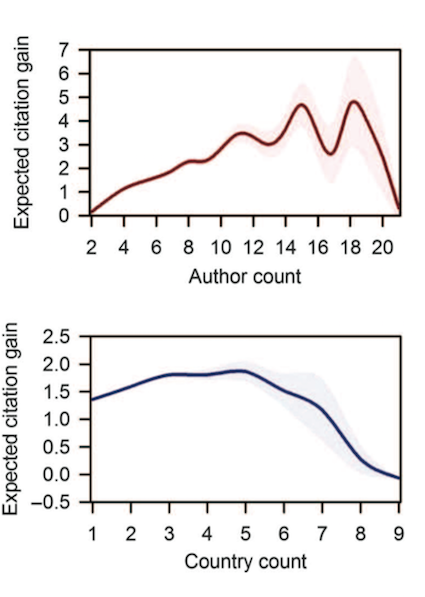

Over all, a paper’s citation rate per capita decreases as the number of authors in a large team goes beyond a certain number -- around 20. And while 20 may seem large in nonscience fields, plenty of science papers far exceed that.

The impact factor as determined by the citation rate, however, grows with the number of countries represented within the research team, until about seven or eight countries, after which only incremental benefits are observed.

The papers in question, all of which represent primary research, were published within the last four decades and are included in the Thomson Reuters Web of Science database for natural, social and applied sciences.

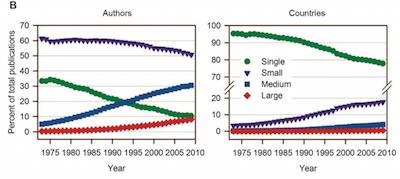

Considering team size, the authors note that grouping publications by number of authors demonstrates the “waning dominance” of small, two- to four-person teams, and a rapid decline in the proportion of single-authored works over the last 40 years. Medium-team research -- five to eight authors -- meanwhile, increased over the same period, as did research by even bigger teams.

The preponderance of single-country research also diminished, while the proportion of small-country teams (two countries represented) increased. The proportions of medium-country (three or four) and large-country (more than four) teams increased marginally.

These increases are in proportion, not absolute numbers, since output of every kind of research has grown since the 1970s. For example, large-author team output grew from 667 papers to 87,525 papers between 1973 and 2009, while single-author output grew from 73,035 to 110,785 papers over the same period. Similarly, large-country team output grew from 28 to 5,507 papers, and small-country team output grew from 208,917 to 825,956. (Larger-country team output growth was exponential in almost all research fields.)
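To make the gap between those growth rates concrete, the short Python sketch below divides each end count by its start count. It is only an illustration using the figures quoted above, not a calculation from the paper; the `growth_factor` helper and the category labels are assumptions introduced here, and the 1973 and 2009 endpoints are assumed to apply to the country figures as well.

```python
# Paper counts quoted in the article: (start count, end count).
# Assumption: the 1973 and 2009 endpoints apply to all four categories.
counts = {
    "large-author teams": (667, 87_525),
    "single-author papers": (73_035, 110_785),
    "large-country teams": (28, 5_507),
    "small-country teams": (208_917, 825_956),
}


def growth_factor(start, end):
    """Return how many times larger `end` is than `start` (hypothetical helper)."""
    return end / start


factors = {name: growth_factor(a, b) for name, (a, b) in counts.items()}

# Print the categories from fastest-growing to slowest-growing.
for name, factor in sorted(factors.items(), key=lambda kv: kv[1], reverse=True):
    print(f"{name}: about {factor:.1f}x")
```

On these figures, large-team output multiplied more than a hundredfold while single-author output grew by roughly half, which is why the shift shows up so clearly in the proportions.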

In their main analysis comparing citation impact to team characteristics, Hsiehchen and his team found that a multinational presence was coupled with a decreased likelihood of not being cited, and an increased probability of being among the most cited papers.

Meanwhile, the disassociation between author numbers and citations was apparent when team sizes grew beyond 20 members in all subject fields, the paper says. “This would be consistent with diseconomies of scale observed in many human endeavors with marginal costs increasing once firms or organizations surpass an optimal mass.”

Hsiehchen and his team tested their hypothesis using generalized linear models and found that the top-ranked papers by team size (the top 1 percent) showed a “reduced or even deleterious effect of additional authors” across the years. An expanded analysis of more than 10 million papers published over a decade ago also showed a decreased or inhibitory effect of author numbers on citation gains across different definitions of large team sizes, after adjusting for year of publication and subject field.

The citation gain related to national affiliations was largely preserved for large-team papers. But that benefit peaks at 18 individuals, before a sharp decline.

Hsiehchen and his team also found a significant citation advantage for biomedical research in particular when there is a foreign (relative to the first author’s country) researcher in the second-to-last author position.

“We surmised that the second-to-last author position in international team papers likely denotes a significant contribution by a foreign [principal investigator] in the provision of management, resources, skills or creative input but not to the extent of the primary team leader or investigators,” the paper says.

The paper acknowledges certain vulnerabilities, primarily that citation metrics don’t necessarily indicate research quality. But it says its findings are significant nonetheless in that very large collaborative science projects may not return results at the scale of investment.

Hsiehchen said via email that he became interested in studying research collaborations and outcomes “because there is little accountability in the way much of science is currently being conducted and promoted. In medicine, often our goal is to apply ‘evidence-based practices’ that are supported by rigorous clinical trials, systematic reviews and other comprehensive analyses. This is crucial to the advancement of medicine and patients as it often means that clinical researchers are invalidating common assumptions, investigating anecdotal evidence and discovering clinically important paradoxes.”

He added, “I believe that we should also apply ‘evidence-based practices’ to the scientific process itself to improve accountability, efficiency and transparency.”

Summing up his research, Hsiehchen said it suggests there’s a “threshold effect” when it comes to collaborative science.

“In essence, bigger is not better,” he said. “We applied the term ‘diseconomies of scale’ to help clarify the mechanism of this effect, which is often used to describe how large companies or firms lose efficiency and produce products at additional cost.”

Reasons for that include ineffective communication, redundant efforts, inefficient organization and increased costly bureaucracy within companies and research teams alike, he said. “There is also the opportunity cost that individual researchers incur by being involved in a team when they may perform better alone or be better invested in other projects.”

While Hsiehchen said his work indicates that not all collaborations are equal, and that team size may be one of the major constraints to developing more creative, quality work, a lot more research needs to be conducted “to assess individual contributions in research teams to understand the diseconomies of scale we observed in citation rates, and to know how we can make collaborations a more efficient process.”

Filippo Radicchi, an assistant professor of informatics and computing at Indiana University, has studied impact factors in science research, across fields. He called Hsiehchen’s paper “very interesting,” in that -- to his knowledge -- it represents the first investigation of multinational collaborations in science based on large-scale bibliographic data.

Radicchi said one weakness of the study, meanwhile, was that it didn’t name the countries involved in multinational research, to see if the results could be extended to countries with much smaller research outputs than, say, the U.S. and Great Britain.

Furthermore, he said, “the same data of the current analysis can be used in the future to study the scientific collaboration network among countries.” What does the research network look like, for example? How did it evolve over time? And what countries play a central role in multicountry collaboration?