When we think about academic misconduct, we tend to think about misrepresentation of research findings or plagiarism. But a new study says that misrepresentation of academic achievements on CVs is a problem requiring attention, too.

For their study, the researchers collected every curriculum vitae submitted for faculty positions at a large, purposely unnamed research university over the course of a year. Then they let the CVs sit for 18 to 30 months so that any pending articles had time to appear as publications they could verify.

To make the data set manageable, the researchers eventually analyzed 10 percent of the sample for accuracy. Of the 180 CVs reviewed, 141, or 78 percent, claimed to have at least one publication. But 79 of those 141 applicants (56 percent) had at least one publication on their CV that was unverifiable or inaccurate in a self-promoting way, such as misrepresenting authorship order.
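The percentages follow directly from the counts. A minimal Python sketch of the arithmetic, using only the figures reported above (the variable and function names are illustrative and do not come from the paper):

```python
# Counts as reported in the article; names are illustrative.
cvs_reviewed = 180           # the 10 percent sample checked for accuracy
cvs_with_publications = 141  # CVs claiming at least one publication
cvs_with_problems = 79       # of those, CVs with at least one flagged citation


def percent(part: int, whole: int) -> int:
    """Share of `whole` represented by `part`, rounded to a whole percent."""
    return round(100 * part / whole)


share_with_publications = percent(cvs_with_publications, cvs_reviewed)
share_with_problems = percent(cvs_with_problems, cvs_with_publications)

print(f"{share_with_publications}% of reviewed CVs listed a publication")
print(f"{share_with_problems}% of those had a flagged citation")
```

Note that the 56 percent figure is a share of the 141 applicants who listed publications, not of all 180 CVs reviewed.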

Trisha Phillips, the paper’s lead author and an associate professor of political science at West Virginia University who studies research ethics, said Wednesday that it’s impossible to tell based on the study’s methodology whether the faculty applicants intended to mislead hiring committees or whether they were making honest mistakes. But she pointed out that the analysis identified just 27 errors that were not self-promoting, such as demoting oneself in authorship order. That’s compared to 193 errors that were self-promoting.

The imbalance is “pretty startling,” Phillips said.

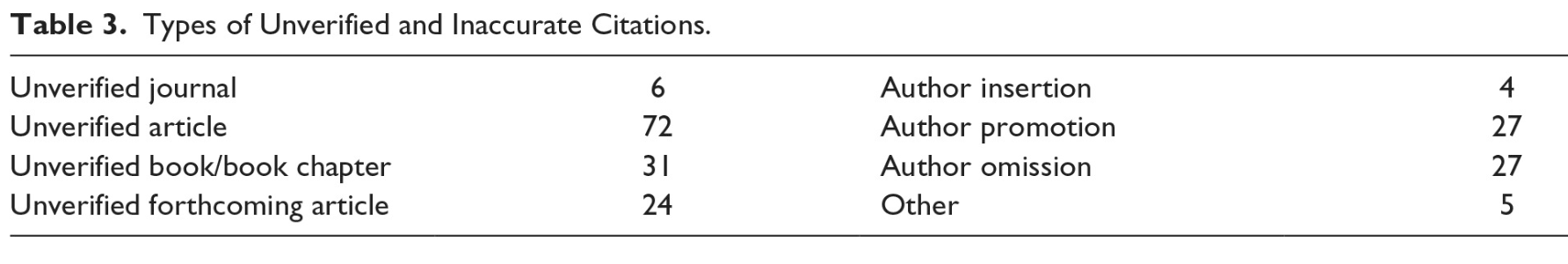

The 193 self-promoting instances of unverified or inaccurate research citations include six articles in journals that coders could not locate (unverified journals) and 72 articles that coders could not find in journals they had verified. There were also 31 books and book chapters that they could not find and 24 forthcoming articles that they could not find in verified journals.

Coders also counted four instances of authorship insertion, 27 instances of authorship promotion and another 27 instances of authorship omission. Authorship insertion involves applicants listing themselves as authors on a publication on which they were not actually included. Promotion is applicants inflating their contribution by bumping themselves up on the list of authors. Omission, meanwhile, is applicants leaving out other authors listed on the publication itself.

Source: Trisha Phillips
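The category counts reported above can be tallied in a few lines. The sketch below uses only the numbers given in this article, with shorthand labels that are illustrative rather than the paper's own. As itemized here, the categories sum to 191, slightly below the 193 total cited; the article does not account for the difference.

```python
# Self-promoting error counts as itemized in the article.
# The dictionary keys are shorthand labels, not the paper's exact wording.
self_promoting = {
    "unverified journal": 6,
    "unverified article": 72,
    "unverified book or chapter": 31,
    "unverified forthcoming article": 24,
    "authorship insertion": 4,
    "authorship promotion": 27,
    "authorship omission": 27,
}
reported_total = 193      # total self-promoting errors cited in the article
not_self_promoting = 27   # errors that did not favor the applicant

itemized_total = sum(self_promoting.values())
unverified = sum(
    count for label, count in self_promoting.items()
    if label.startswith("unverified")
)
authorship = itemized_total - unverified

print(f"itemized total: {itemized_total} (reported: {reported_total})")
print(f"unverified items: {unverified}, authorship errors: {authorship}")
print(f"self-promoting to other errors: {reported_total / not_self_promoting:.1f} to 1")
```

On these figures, self-promoting errors outnumber the other kind by roughly seven to one, which is the imbalance Phillips describes.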

Why It Happens

What could be motivating this behavior? Phillips said that just as the publish-or-perish dynamic on the tenure track can encourage the big three of deliberate misconduct (data fabrication, falsification or plagiarism) or other detrimental research practices, the tight job market may encourage CV embellishment.

“This likelihood of getting caught is low, and the rewards for getting away with it is high,” Phillips added.

And while a tight job market means that hiring committees don’t have time to play sleuth with scores of applicants’ CVs, she said, they should probably fact-check their top candidates’ publication records.

“I’ve been on a hiring committee where [we] got 150 applications, and I never would have fact-checked all of them, or recommend that,” she said. “But by the time you’ve narrowed it down to two or three, I’d say that most search committees should take a look and then go back to the longer list if someone gets disqualified.”

If you’ve been on hiring committees and haven’t been verifying CVs, don’t be too hard on yourself. Phillips said that up until now, “there’s been a sense that this isn’t a problem in higher ed, and [it] doesn’t really occur to people that this kind of checking needs to be done.”

Even so, there have been some high-profile instances of fudged CVs. Anoop Shankar, a former professor of community medicine at West Virginia University, was accused of falsifying his credentials to get grants. He also was accused of lying to immigration authorities, improperly using his West Virginia University purchasing card and forging professors’ signatures on fake recommendation letters, according to the Pittsburgh Post-Gazette. Federal authorities sought his extradition to the U.S. last year, when he was believed to have traveled to India from the United Arab Emirates.

Phillips said that case and two of her research team members’ own encounters with misleading CVs led them to wonder just how common the phenomenon was in academe, as opposed to other work environments. A literature search found what Phillips called “surprisingly high rates” of misrepresentation in applications for residency and fellowship programs -- but nothing on non-health science programs.

And so they began collecting and eventually analyzing CVs from non-health science faculty searches across the unnamed, land-grant doctoral university in 2015-16. Coders were particularly interested in reported publications, by type and status: article, book or book chapter, published or forthcoming.

The verification process for journal articles had five parts, starting with article title and author name searches in Google Scholar; Academic Search Premier, an aggregated database available through the university library; and Google. If that failed, the coders also searched for the journal website. For books and book chapters, coders used essentially the same process.
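The search order described above amounts to a fallback chain: try each source in turn and stop at the first one that turns up the publication. The following Python sketch illustrates that idea only. It is not the researchers' actual tooling, the lookup functions are hypothetical stand-ins for manual searches, and it covers only the steps named in this article.

```python
from typing import Callable, Optional, Sequence, Tuple

# A citation is modeled as a plain dict; the field names are assumptions.
Citation = dict
Lookup = Callable[[Citation], bool]


def verify_citation(
    citation: Citation, sources: Sequence[Tuple[str, Lookup]]
) -> Optional[str]:
    """Return the name of the first source that finds the citation, else None."""
    for name, lookup in sources:
        if lookup(citation):
            return name
    return None


# Hypothetical stand-ins for the manual searches the coders performed.
def not_found(citation: Citation) -> bool:
    return False


def found_by_title(citation: Citation) -> bool:
    return citation.get("title") == "A Known Article"


# Order follows the article: three searches, then the journal's own website.
sources = [
    ("Google Scholar", not_found),
    ("Academic Search Premier", not_found),
    ("Google", found_by_title),
    ("journal website", not_found),
]

print(verify_citation({"title": "A Known Article"}, sources))
print(verify_citation({"title": "An Untraceable Article"}, sources))
```

A citation that comes back as `None` after every source has been tried is what the coders would record as unverified.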

Next, the coders categorized the errors by type, namely unverified journal, unverified article, unverified book and book chapter, unverified forthcoming article, authorship insertion, authorship promotion and authorship omission. They also noted errors that were not self-promoting, such as incorrect titles, journals and publication dates.

A Matter of Trust

Most of the sample involved applications for assistant professorships (150), along with visiting assistant professorships (21), postdoctoral fellowships (four), associate and senior professorships (two each) and a chair’s position (one).

The 141 applicants who said they were authors listed between one and 77 publications each, with an average of eight. The average number of publications listed by entry-level applicants (to postdoc, visiting and assistant professor positions) was 6.7. Midlevel and senior faculty applicants reported an average of 34 publications each, across a variety of fields.

Among the 79 authors with at least one unverified or inaccurate research citation, the number of such citations per author ranged from one to 17, with an average of 2.4. Thirty-five of them had one unverified or inaccurate citation, and 44 had two or more. The percentage of an author's citations that were unverified or inaccurate ranged from 3.9 to 100, with an average of 40.3.
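These per-author figures are consistent with the totals reported earlier. A quick check in Python, assuming the 2.4 average is the 193 self-promoting errors spread across the 79 flagged applicants (the article does not state the calculation, so that pairing is an assumption):

```python
# Figures from the article; pairing 193 with 79 is an assumption about
# how the reported average was derived.
flagged_citations = 193
flagged_authors = 79
authors_with_one = 35
authors_with_two_or_more = 44

# The two groups should account for every flagged author.
groups_add_up = authors_with_one + authors_with_two_or_more == flagged_authors

mean_per_author = round(flagged_citations / flagged_authors, 1)
share_repeat = round(100 * authors_with_two_or_more / flagged_authors)

print(f"groups add up: {groups_add_up}")
print(f"mean flagged citations per author: {mean_per_author}")
print(f"share of flagged authors with two or more: {share_repeat}%")
```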

Early-career authors were no more likely to have errors than advanced scholars.

The paper lists a number of limitations, including that it’s possible that some articles listed as pending really are still waiting to be published somewhere.

Yet the paper says that given the increasing popularity of online preprints and first views for journals with serious backlogs, the margin of error is probably low. And even if the analysis dropped forthcoming articles, it says, the falsification rate would change only slightly, from 17 percent of publications to 16 percent.

Phillips and her colleagues published their paper in the Journal of Empirical Research on Human Research Ethics. They found the money to publish it open access, to raise more awareness of the issue. And as the paper says, the issue is one of trust.

“In the increasingly social world of science, researchers need to trust their collaborators and other scholars at nearly every point of the research process, including literature reviews, data collection, data analysis, manuscript preparation and peer review,” it says. Yet evidence “suggests that this trust might not be well placed.”