


In an excellent column, Ray Schroeder, senior fellow for the Association of Leaders in Online and Professional Education, laments the tendency of many instructors to rely on text-specific test banks as source material for student assessment. Not only are these questions susceptible to cheating, he says, they assess lower-order, nonlocalized and therefore less relevant knowledge like names and dates.

Schroeder praises Grant Wiggins’s work on authentic assessment as a preferable standard for evaluation design. Authentic approaches require judgment and innovation, asking students to proactively apply knowledge and skills in realistic contexts. “There is no shortcut to demonstrating that you can apply what you have learned to a unique, newly shared situation,” Schroeder asserts. I could not agree more. Authenticity is key.

But here is where things get tricky: Schroeder states, “Clearly, authentic assessments are never a multiple choice of a, b, c, d or true/false exams.” There is certainly some truth to this. Multiple-choice assessments are, indeed, limited.

At the same time, many instructors, particularly those at teaching-intensive institutions or those leading high-enrollment courses, do not have the time to commit to more rigorous assessment exercises. I have discussed this issue with dozens of colleagues at conferences, as well as during numerous iterations of an online teaching institute I co-facilitate at my university. Multiple-choice assessments, many argue, are logistically necessary.

Students like them, too. I conducted a survey of over 1,400 college students in January 2021 and asked, among other things, what types of assessments they find work well in online and remote learning environments. Multiple-choice quizzes rated higher than any other instrument, including various forms of writing, research projects and presentations.

There are two explanations for this pattern. The first concerns academic integrity: multiple-choice assessments may simply be easier because students have access to the internet in non-face-to-face courses. (Proctoring services may mitigate some cheating, but surveilling students during tests creates new challenges.) The second explanation is that multiple-choice assessments are cognitively unambiguous and may provoke less anxiety when students do not have access to an instructor to raise points of clarification. Students have told me both explanations are valid.

How might we resolve this dilemma? Authentic assessments are widely viewed as pedagogically superior, yet multiple-choice assessments are often preferable to instructors and students alike. The solution, in some cases at least, is to rethink the premise that multiple-choice questions cannot meet the standards of authentic assessment. What if they could?

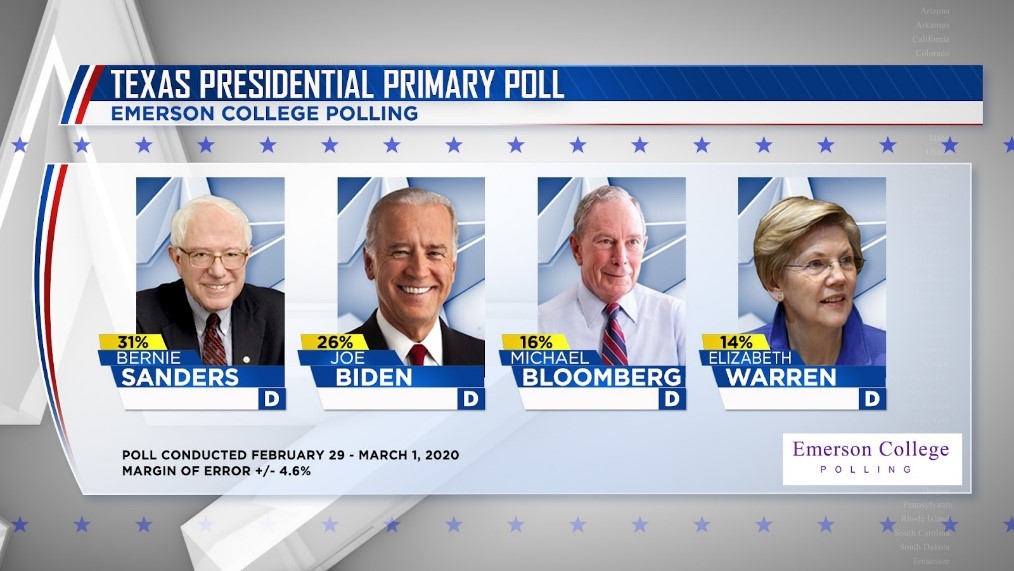

Consider this example: I regularly teach political science research methods, and one goal in that course is that students develop technological literacy skills that will help them navigate the often-sticky world of data in politics. In pursuit of this goal, we study the science of sampling and the uncertainties it produces. It is vital that students can interpret the substantive meaning of a poll they see in the news media after factoring in various elements, such as its margin of error.

If I were to ask you, “What is the standard margin of error in a political poll?” in an online multiple-choice quiz, what would you do? If you did not know the answer, chances are you would copy that question into a search engine, or at least be tempted to do so if using the internet violated the terms of the assessment. I encourage you to take a moment and google the question above. Not only will you find dozens of pages that contain the answer, you may well see the answer in numerous page previews as you scroll down the screen.

This is a poorly designed multiple-choice question, one that falls short of the authentic standard described above. Not only is it easily searchable online, but the knowledge it assesses is not even that useful. It is the application, not the recognition, of a poll’s margin of error that really matters. That is the skill I seek to cultivate in my students.

So what if I did the following instead? On a quiz, I provide students with an actual poll and ask them to assume the role of a newspaper editor selecting a headline for a story about it.

Which newspaper headline most accurately describes the state of the Democratic primary race in Texas?

- “Sanders Polling Far Ahead of All Candidates Ahead of Texas Election”

- “Sanders and Biden Lead the Field, but No Clear Frontrunner”

- “Bloomberg’s Poor Poll Performance Shows Money Does Not Matter in Primaries”

- “Warren Least Likely to Be Democratic Party Nominee in 2020”

- “Inconclusive Polls Suggest Texans Split Evenly Among Candidates”

This question is superior in several ways. First, it is not particularly hackable. A test taker could not easily look up an answer to this question online. More importantly, the question is also much more authentic. It places students in a real-world context, asks them to engage in an important civic exercise and provides a basis for evaluating their ability to apply knowledge related to sampling and poll interpretation.
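The reasoning this headline question rewards can be spelled out in a few lines. The Python below is a minimal sketch, assuming a simple random sample, a normal approximation and a 95 percent confidence level; the poll figures are hypothetical rather than those of the Texas poll, and the function names are invented for illustration. It checks whether one candidate's lead exceeds the margin of error for the gap between two candidates, which is wider than the margin of error usually reported for a single candidate's share.

```python
import math

# z-score for a 95% confidence level under a normal approximation (assumption).
Z_95 = 1.96


def margin_of_error(p: float, n: int, z: float = Z_95) -> float:
    """Margin of error for one candidate's share p in a simple random sample of n respondents."""
    return z * math.sqrt(p * (1 - p) / n)


def lead_margin_of_error(p1: float, p2: float, n: int, z: float = Z_95) -> float:
    """Margin of error for the gap p1 - p2 between two candidates measured in the same poll.

    Both shares come from one sample, so their errors are negatively correlated
    and the variance of the gap is (p1 + p2 - (p1 - p2) ** 2) / n.
    """
    return z * math.sqrt((p1 + p2 - (p1 - p2) ** 2) / n)


def lead_is_clear(p1: float, p2: float, n: int, z: float = Z_95) -> bool:
    """True if candidate 1's lead over candidate 2 exceeds the margin of error on that lead."""
    return (p1 - p2) > lead_margin_of_error(p1, p2, n, z)


if __name__ == "__main__":
    # Hypothetical poll, not real data: 600 respondents, Candidate A at 26%, Candidate B at 24%.
    a, b, n = 0.26, 0.24, 600
    print(f"Margin of error on A's share: +/-{margin_of_error(a, n):.1%}")
    print(f"Margin of error on the A-B gap: +/-{lead_margin_of_error(a, b, n):.1%}")
    print("Clear frontrunner" if lead_is_clear(a, b, n) else "No clear frontrunner")
```

With these made-up figures, the 2-point gap sits well inside the roughly 5.7-point margin of error on the lead, which is the kind of judgment that points an editor toward a “no clear frontrunner” headline rather than one declaring a runaway leader.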

The same process can be applied to all sorts of content. My political science students respond to multiple-choice questions on a range of topics, such as determining which ballot-counting system would most benefit a particular candidate given a set of voters’ preferences, advising a donor how they should spend their money based on campaign finance laws and judging whether a person’s speech is protected by the First Amendment.
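The ballot-counting questions turn on the same kind of applied reasoning. As a minimal sketch, assuming every voter ranks all candidates and comparing only plurality with instant-runoff (ranked-choice) voting, the Python below tallies a hypothetical electorate; the candidates, ballot counts and function names are invented for illustration and are not drawn from the author's course materials.

```python
from collections import Counter


def plurality_winner(ballots):
    """Candidate with the most first-choice votes (ties broken arbitrarily)."""
    first_choices = Counter(ballot[0] for ballot in ballots)
    return first_choices.most_common(1)[0][0]


def instant_runoff_winner(ballots):
    """Repeatedly eliminate the last-place candidate until someone holds a majority.

    Assumes complete rankings, so no ballot is ever exhausted.
    """
    remaining = {candidate for ballot in ballots for candidate in ballot}
    while True:
        # Count each ballot for its highest-ranked candidate still in the race.
        tally = Counter()
        for ballot in ballots:
            for choice in ballot:
                if choice in remaining:
                    tally[choice] += 1
                    break
        total = sum(tally.values())
        leader, votes = tally.most_common(1)[0]
        if votes * 2 > total or len(remaining) == 1:
            return leader
        # Eliminate the candidate with the fewest votes (ties broken arbitrarily).
        loser = min(remaining, key=lambda c: tally[c])
        remaining.remove(loser)


if __name__ == "__main__":
    # Hypothetical preference profile: 20 voters ranking candidates A, B and C.
    ballots = (
        [("A", "C", "B")] * 8
        + [("B", "C", "A")] * 7
        + [("C", "B", "A")] * 5
    )
    print("Plurality winner:", plurality_winner(ballots))
    print("Instant-runoff winner:", instant_runoff_winner(ballots))
```

In this made-up profile, A wins under plurality with 8 of 20 first choices, but once C is eliminated and C's supporters transfer to B, B wins the runoff 12 to 8. A quiz item built on a profile like this asks students which counting system a given candidate's campaign should prefer, rather than asking them to recite a definition.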

To be clear, these higher-order multiple-choice questions are not a panacea. There are certain features of authentic assessment, like integrating feedback or refining a product, for which they are not well suited. For instance, I would not use this method to assess students in a senior capstone course charged with recommending changes to the U.S. Constitution. More generally, I do not advise instructors to rely only on multiple-choice quizzes -- or any singular method -- when assessing student progress.

Yet we should not dismiss them entirely, either. The realities of higher education for many instructors and students render multiple-choice assessments a necessity, or at least very helpful under some circumstances. It does not need to be an either-or proposition. Instructors can upgrade -- or, better yet, bypass -- conventional test-bank multiple-choice items. In doing so, we simultaneously increase the relevance of these assessments and promote academic integrity. Higher-order multiple-choice assessments can be instruments on the assessment tool belt, along with other implements. Just be sure to make all of them authentic.