The idea that the adoption of digital instructional technologies will lag without faculty buy-in is becoming widely accepted by college administrators and (smart) vendors alike. So, too, is the reality that professors are unlikely to buy in unless they can be persuaded that a particular piece of software or digital course content will help them teach, their students learn, or both.

But with vendors pitching a dizzying array of software tools and approaches -- many of which sound alike and promise the moon -- how might colleges that want to build support among faculty members and others educate them about the options and give them the information they need to make decisions?

That's the premise of the Courseware in Context Framework developed jointly by the Online Learning Consortium and Tyton Partners, an investment banking and strategy consulting firm, with support from the Bill & Melinda Gates Foundation. The framework grew from a survey Tyton conducted in 2014 (reinforced by a new study released this month) showing that faculty members were widely dissatisfied with the learning management systems and other courseware they were using to deliver their courses digitally.

The study also found that to the extent faculty members were slow to adopt new technologically enabled approaches to teaching, "one of the biggest barriers to adoption was the lack of time [they] have to discover, evaluate and then think about approaches to redesigning their courses, in response to their desire to improve them," said Gates Bryant, a partner in Tyton's strategy consulting practice. Skepticism about the efficacy of such tools was also frequently cited.

As part of Tyton's Gates Foundation-supported work to produce information that can help colleges and universities use technology to improve student outcomes, the organizations discussed the possibility of creating "some framework for evaluating quality in the courseware arena," Bryant said. Gates officials originally asked if a "quality seal" was possible, a way to "draw a line in the sand about what's quality or not" in digital courseware.

It quickly became apparent to the members of a working group that Tyton and the Online Learning Consortium assembled that creating something so rigid was neither possible nor desirable.

"What may be good in one context may not be a good tool in another," Bryant said. "The key thing an institution or professor needs to do is to sort through the context of one's own situation and then discern what features of a courseware product are most valuable in that context."

The framework (referred to, in shorthand, as CWiC) is designed to be used by teams of faculty members, administrators, instructional designers or others trying to decide what courseware tool to use for a particular course, and to help them "cut through the marketing noise" that is abundant in the ed tech marketplace. (It expressly does not address the content itself, which is fully in the faculty's domain.) Courseware can include publisher-owned tools such as McGraw-Hill's ALEKS or Cengage's Learning Objects, OER platforms such as OpenStax or Lumen, or freestanding platforms such as Smart Sparrow or Acrobatiq.

"When a product claims to be adaptive," for instance, "the framework can help you discern how adaptive they are and in what way," Bryant said. "It is designed to give faculty members the 5-6 questions they're going to want to ask if they're evaluating a piece of courseware."

The framework, which currently can be used through portals on EdSurge and LearnPlatform, has multiple parts, designed for different needs: a "product primer," for instance, is for those trying to figure out at a basic level the capabilities of different sorts of tools (is the courseware "adaptive" so that different learners see different materials based on their knowledge level? how usable is it? how much assessment measurement does it provide?) and to begin to judge which capabilities are most important in their situation.

Other, more sophisticated rubrics are designed to help instructional designers analyze particular products more deeply, and to help institutions assess the success of course-level and institution-level implementations.

Putting the Framework to Use

Tyton and OLC are working to test and expand the use of the framework by institutions and instructors. Three members of the CWiC project's executive committee are piloting the framework at their institutions, and participants in the Association of Public and Land-grant Universities' Personalized Learning Consortium are test-driving the framework to varying degrees as they undertake experiments with adaptive learning.

Timothy Renick, vice provost and vice president for enrollment management and student success at Georgia State University, one of those APLU institutions, said the decision to use the CWiC framework flowed from the realization that new instructional approaches like adaptive learning can't be "imposed from the top down." The university had been experimenting for a few years with adaptive learning in its mathematics courses, with positive results.

But as the institution decided to try to expand the reach of adaptive learning into key courses in other disciplines, "we realized this was not something we can orchestrate from the central offices," Renick said. "It has to come through organic growth."

A grant from APLU (with money provided by Gates) helped Georgia State create a process in which various social science departments would explore different approaches to adaptive learning. Renick said the CWiC framework gave departments better resources to "understand what options are out there, in a way that is manageable and not overwhelming."

Shelby Frost, a clinical associate professor in Georgia State's department of economics, is leading that department's adaptive learning effort. She said the CWiC framework was used mostly by staff members of the Center for Excellence in Teaching and Learning, who ran the various vendors' products through the framework and gave the professors a spreadsheet analyzing how they fared.

Those that passed muster were then invited to campus to present their products to the participating professors, and "that's where the real substance of the analysis happened," said Frost, who describes herself as "kind of a techno-geek." "The framework helps to sort through the options, which are overwhelming, even for someone like me."

Bryant of Tyton doesn't argue with that assessment. "Our ultimate goal is to equip and arm the faculty to be good consumers, choosy consumers, when it comes to digital courseware," he said.

The Skeptic's Point of View

The faculty members likeliest to use an analytic tool like the CWiC framework are those who believe in the potential power of digital courseware and are trying to decide which tool (from which provider) to use.

But what about the many professors who are doubters -- not just those who would prefer never to leave behind their chalk and chalkboards, but even instructors who may believe that digital technology has promise but want to know not just how the tools work but what their values are?

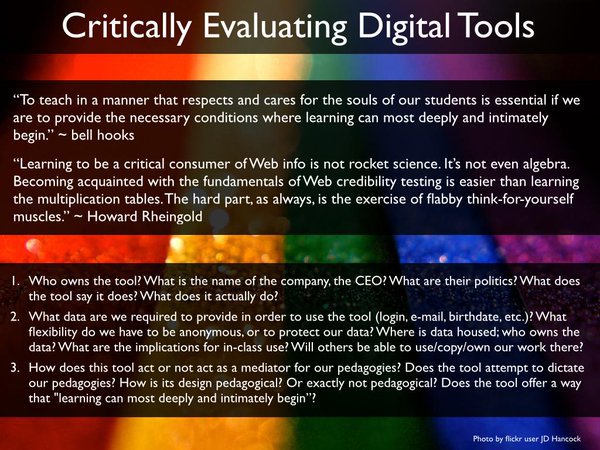

This month, in a post on Hybrid Pedagogy that generated significant interest (and a story on Inside Higher Ed), Sean Michael Morris and Jesse Stommel largely eviscerated the anti-plagiarism tool Turnitin. They ran Turnitin through their own rubric for critically evaluating digital tools (see below), which they describe as a "crap detection" exercise that leads faculty members to ask hard questions and engage in critical thinking about the products they're considering using.

The questions, as you can see, are very different from those framed by the CWiC framework; they focus on such issues as the privacy of student data, how much freedom a tool grants the instructor, and even the politics of the tool's producer.

In an interview, Stommel and Morris discussed the differences between their analysis and CWiC. Stommel admitted to an immediate skepticism about CWiC because Tyton works closely with companies and Gates has a history of dismissing the importance of professors in promoting innovation in higher education.

They said they thought that running digital tools through the CWiC framework wouldn't prompt instructors to engage in the "introspective and reflective work" that Morris said professors should do before adopting new technologies.

"There are a lot of assumptions built into their framework about what education is and how it works, assumptions that we work to question all the time," said Morris, an instructional designer in the office of digital learning at Middlebury College.

Added Stommel: "This seems set up to just pop a tool into their matrix and come out with an answer, which leaves out the critical thinking piece that we think is so important. When we do our activity, faculty typically end up with more questions than answers at the end."

Still, as the interview progressed, Morris envisioned a scenario where the CWiC framework could work in tandem with their own. "I could see a teacher applying this framework to a piece of courseware and saying, 'This tool seems like it would work for me in my situation,' " he said. "And then to say, 'now I have something to run through these questions,' " referring to those framed in their own rubric.

Combined, he said, "that would put so much agency in the teacher's hands."