How Elsevier calculates a journal's CiteScore, a new way to measure the impact of research.

Elsevier

Does higher education need another ranking?

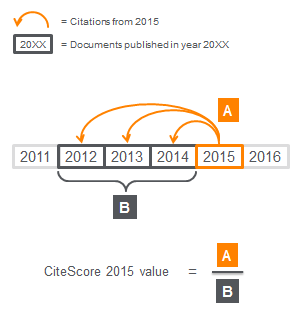

For Elsevier, the answer is yes. Last week, the publisher launched CiteScore, a set of metrics that measure a scholarly journal’s impact by looking at the average number of citations per item it receives over a three-year period. The metrics cover the more than 22,500 journals indexed in Scopus, Elsevier’s citation database.

Researchers and publishers will ultimately be the ones to decide whether CiteScore gains traction. And as is often the case when Elsevier launches a new product, some in academe are approaching CiteScore with skepticism.

Lisa H. Colledge, director of research metrics at Elsevier, discussed the thought process behind CiteScore and the reaction to the launch in an interview with Inside Higher Ed. She said that despite the world of scholarly communication’s “love-hate relationship” with rankings, impact metrics aren’t going away.

“Regardless of what you may think about the ongoing activity of ranking, people are still interested in using new data sets and developing new metrics in looking at different ways of how things can be excellent,” Colledge said. “We’re doing our best to answer that need.”

The CiteScore metrics are only part of what the company calls its “basket of metrics.” At the moment, it also includes metrics such as SCImago Journal Rank, which looks at weighted citations, and SNIP, short for Source Normalized Impact per Paper, but it is expected to expand.

The company is conducting a survey to gauge levels of interest in other metrics that it is considering adding to the basket, including metrics that look at collaboration, commercial use, funding, mentorship and the societal impact of research, among others. Elsevier also plans to add alternative metrics and usage metrics, and is exploring whether it can quantify something such as the quality of a journal’s peer-review process.

Despite Elsevier’s emphasis on offering multiple metrics, the top-level CiteScore has attracted most of the attention following last week’s launch. First came an analysis from Nature, showing that a high impact factor didn’t necessarily translate to a high CiteScore. Prestigious journals such as the Journal of the American Medical Association, The Lancet and the New England Journal of Medicine were some of the examples cited.

Then, Carl T. Bergstrom and Jevin D. West, the two University of Washington researchers behind the Eigenfactor Project, released findings showing that journals published by the Nature Publishing Group, a major science publisher owned by Elsevier competitor Springer Nature, scored about 40 percent lower using Elsevier’s metrics than their Journal Impact Factors (a popular metric for measuring journal impact calculated by Clarivate Analytics) would suggest. The researchers compared the CiteScore and Journal Impact Factor results for 9,527 journals for their analysis.

A spokesperson for the Nature Publishing Group declined to comment for this article.

Bergstrom and West’s follow-up findings -- which critics of Elsevier eagerly seized on -- showed Elsevier’s journals getting a 25 percent boost compared to what one might expect from looking at the journals’ impact factors. As the researchers expanded their analysis to compare the scores to those of other major publishers’ journals, however, the gap narrowed. On Monday, the researchers said they needed to do further analyses before committing to the conclusion that Elsevier’s journals benefit from using the CiteScore metrics.

“The big story is that this is just an absolute disaster for Nature journals,” Bergstrom said in an interview. “We also see that Elsevier benefits, but the question at this point is how much of that benefit is coming exclusively at the expense of Nature versus how much they are further singling themselves out relative to other publishers.”

Elsevier, perhaps in anticipation of concerns about bias, has been open about its methodology (which in turn has enabled analyses such as the one by Bergstrom and West). The scores are also available for free, and Scopus members can see how the scores are calculated.

Compared to the Journal Impact Factor, CiteScore covers more journals (about 22,500 versus 11,000 indexed in Web of Science), uses a longer observation window (three years versus two) and counts all document types, without distinguishing between editorials, letters and peer-reviewed articles. Counting every document type was an effort to remove the “judgment call” that goes into determining which document types should be included in the calculation, Colledge said.
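To make those differences concrete, here is a minimal sketch in Python of a citations-per-item average. It is not Elsevier's or Clarivate's actual code. The function name, the record fields and the sample journal are hypothetical, and two simplifications are assumed: the window is taken to be the years immediately before the scoring year, and the impact-factor-style variant is assumed to count only peer-reviewed articles in its denominator while still counting citations to every item.

```python
def citations_per_item(items, score_year, window_years, counted_types=None):
    """Average citations per counted item (illustrative only).

    items: list of dicts with 'year' (publication year), 'doc_type', and
           'citations' (citations received in score_year). Hypothetical schema.
    window_years: how many publication years before score_year to include.
    counted_types: document types counted in the denominator;
                   None means every document type is counted.
    """
    first_year = score_year - window_years
    in_window = [it for it in items if first_year <= it["year"] < score_year]

    # Numerator: citations to every item published in the window.
    citations = sum(it["citations"] for it in in_window)

    # Denominator: all items, or only the selected document types.
    if counted_types is None:
        counted = in_window
    else:
        counted = [it for it in in_window if it["doc_type"] in counted_types]

    if not counted:
        raise ValueError("no counted items in the window")
    return citations / len(counted)


# Made-up journal: three articles plus an editorial and a letter.
journal = [
    {"year": 2013, "doc_type": "article", "citations": 30},
    {"year": 2014, "doc_type": "article", "citations": 20},
    {"year": 2014, "doc_type": "editorial", "citations": 2},
    {"year": 2015, "doc_type": "article", "citations": 10},
    {"year": 2015, "doc_type": "letter", "citations": 0},
]

# Three-year window, every document type in the denominator.
citescore_like = citations_per_item(journal, 2016, window_years=3)

# Two-year window, only articles in the denominator (assumed simplification).
impact_factor_like = citations_per_item(
    journal, 2016, window_years=2, counted_types={"article"}
)

print(citescore_like, impact_factor_like)
```

In this made-up case, the all-documents, three-year average is lower than the articles-only, two-year one, because rarely cited editorials and letters enlarge the denominator. That is only an illustration of the mechanism, not a reproduction of any published score.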

“We hope that people will use the information that we have put out there for free and made as transparent as we can in a fair way to really investigate their concerns and conclude that they can see there is no problem,” Colledge said. “These metrics are just metrics, after all.”

While researchers applauded Elsevier for making the metrics transparent, some critics said CiteScore presents a larger question about conflicts of interest.

Even Bergstrom and West, while they said they “don’t see any reason to believe at this point they [Elsevier] are setting up a rigged system or cheating in some way,” described themselves as “uncomfortable” with the idea that Elsevier, a billion-dollar company whose thousands of books, journals and digital services help shape the world of scientific, technical and medical publishing, owns a set of metrics evaluating the impact of journals.

Bergstrom explained the conflict using an analogy: “If Ford bought Kelley Blue Book, we wouldn’t really believe Kelley Blue Book as much,” he said.

Ellen Finnie, head of scholarly communications and collections strategy at the MIT Libraries, made a similar argument in a blog post. “Having a for-profit entity that is also a journal publisher in charge of a journal publication metric creates a conflict of interest and is inherently problematic,” she wrote.

William Gunn, Elsevier’s director of scholarly communications, responded to the post on Twitter, again pointing to the company’s openness concerning how the score is calculated.

“This is where I get to point happily to the Scopus team using openness and transparency to their advantage,” he wrote. “Don't trust, check!”

Roger C. Schonfeld, director of the libraries and scholarly communication program at Ithaka S+R, responded to Gunn with a comment that a commitment to transparency doesn’t fundamentally address the issue.

“Openness and transparency about the metric and its calculation are not antidotes to the underlying conflict of interest,” Schonfeld wrote. The ensuing back-and-forth showed the two remained on different pages.

In an interview, Schonfeld said he was asking a question that is not related to how the CiteScore metrics work.

“I actually don’t think it matters whether the metrics benefit Elsevier or not,” Schonfeld said. “To my mind, the question is very, very straightforward: Is there a conflict of interest if a journal publisher controls a metric by which journals are assessed?”

On top of that question, there is a long-running debate about the value of journal metrics. In her blog post, Finnie argued that the emphasis on journal prestige has a negative impact on scholarly publishing -- a common argument against journal quality metrics -- and urged academe to pay more attention to article-level and alternative metrics for judging the impact of scholarship.

“While there is convenience -- and inertia -- in the longstanding practice of using journal citation measures as the key journal quality assessment vehicle, article-level and alternative metrics provide a needed complement to traditional citation analytics, and support flexible, relevant, real-time approaches to evaluating the impact of research,” Finnie wrote. “Our dollars and our time would seem to be well spent focusing on these innovations, and moving beyond journal citation-based quality measures as a proxy for article quality and impact.”

Colledge said Elsevier isn’t investing in journal metrics at the expense of article-level or other types of metrics, but that its research has shown there is an appetite among researchers for more ways to judge the impact of journals. She also stressed two “golden rules” of using research metrics: first, combine metrics with qualitative input; second, never use just one metric.

“Whichever metric it is, it’s never good enough,” Colledge said. “They all have weaknesses.”