Greetings from Chicago, where I'm about to start a meeting at the Federal Reserve Bank of Chicago on Mobilizing Higher Education to Support Regional Innovation and A Knowledge-Driven Economy. The main objective of the meeting is to explore a possible higher education-focused follow-up to the OECD's Territorial Review: the Chicago Tri-State Metro Area. I'll develop an entry about this fascinating topic in the near future.

Before I head out to get my wake-up coffee, though, I wanted to alert you to a 'hot-off-the-press' report by Toronto-based Higher Education Strategy Associates (HESA). The report can be downloaded here in PDF format, and I've pasted in the quasi-press release below, which just arrived in my email inbox.

More fodder for the rankings debate, and sure to interest Canadian higher ed & research people, not to mention their international partners (current & prospective).

Kris Olds

>>>>>>

Research Rankings

August 28, 2012

Alex Usher

Today, we at HESA are releasing our brand new Canadian Research Rankings. We're pretty proud of what we've accomplished here, so let me tell you a bit about them.

Unlike previous Canadian research rankings conducted by Research InfoSource, these aren't simply about raw money and publication totals. As we've already seen, those measures tend to privilege strength in some disciplines (the high-citation, high-cost ones) more than others. Institutions which are good in low-citation, low-cost disciplines simply never get recognized in these schemes.

Our rankings get around this problem by field-normalizing all results by discipline. We measure institutions' current research strength through granting council award data, and we measure the depth of their academic capital ("deposits of erudition," if you will) through use of the H-index (which, if you'll recall, we used back in the spring to look at top academic disciplines). In both cases, we determine the national average of grants and H-indexes in every discipline, and then adjust each individual researcher's and department's scores to be a function of that average.

(Well, not quite all disciplines. We don't do medicine because it's sometimes awfully hard to tell who is staff and who is not, given the blurry lines between universities and hospitals.)
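For readers who like to see the arithmetic, here is a minimal sketch of what field-normalizing by discipline can look like. It is an illustration only, not HESA's actual procedure: the function name `field_normalize`, the sample researchers and their scores are all made up, and dividing each score by the discipline's national average is just one simple way to make a score "a function of that average."

```python
from collections import defaultdict
from statistics import mean


def field_normalize(records):
    """Return each researcher's score relative to their discipline's average.

    `records` is a list of (researcher, discipline, score) tuples, where the
    score could be an H-index or a grant total. Dividing by the discipline
    average is an assumption made for this sketch.
    """
    # Group raw scores by discipline.
    scores_by_discipline = defaultdict(list)
    for _, discipline, score in records:
        scores_by_discipline[discipline].append(score)

    # Average for each discipline (standing in for the national average).
    averages = {d: mean(s) for d, s in scores_by_discipline.items()}

    # A value of 1.0 means "exactly average for the field".
    normalized = {}
    for researcher, discipline, score in records:
        avg = averages[discipline]
        normalized[researcher] = score / avg if avg else 0.0
    return normalized


# Hypothetical data: a high-citation field next to a low-citation one.
sample = [
    ("Researcher A", "physics", 30),
    ("Researcher B", "physics", 10),
    ("Researcher C", "history", 6),
    ("Researcher D", "history", 2),
]

result = field_normalize(sample)
for name, value in result.items():
    print(f"{name}: {value:.2f}")
```

In this made-up example the historian with a raw score of 6 ends up level with the physicist whose raw score is 30, because each is 50 per cent above their own field's average. That is the sense in which normalizing stops the high-citation, high-cost disciplines from dominating.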

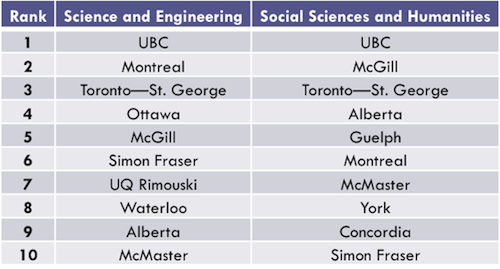

Our methods help to correct some of the field biases of normal research rankings. But to make things even less biased, we separate out performance in SSHRC-funded disciplines and NSERC-funded disciplines, so as to better examine strengths and weaknesses in each of these areas. As it turns out, though, strength in one is substantially correlated with strength in the other. In fact, the top university in both areas is the same: the University of British Columbia (a round of applause, if you please).

I hope you'll read the full report, but just to give you a taste, here's our top ten for SSHRC and NSERC disciplines.

Eyebrows furrowed because of Rimouski? Get over your preconceptions that research strength is a function of size. Though that's usually the case, small institutions with high average faculty productivity can occasionally look pretty good as well.

More tomorrow.