Professors love to hate grade inflation, saying course marks aren’t as meaningful as they used to be. A new paper makes the case that easy grading is actually a symptom of poor assessment practices rather than a cause and that, either way, reducing leniency in grading may lead to more accurate assessment.

“The strong association between grading leniency and reduced grading reliability … calls for interpretations that go beyond the effect of restricting grades to fewer categories,” reads the paper, now available online in Studies in Higher Education. “One possible explanation is that grading leniency is the result, rather than the cause, of low grading reliability. Consider faculty members who suspect that their assessment methods are unreliable. This could occur in course subjects in which assessment of student performance requires subjective and complex judgment.”

Less “flattering reasons” for low grading reliability include “badly designed or poorly executed assessments,” the study continues. “Increasing grading leniency as a compensating mechanism for low grading reliability can be rationalized as an ethical behavior because it avoids assigning bad grades to good students. It is also a prudent strategy because, though students may accept high and unreliable grades, they might begrudge low and unreliable ones.”

“The Relationship Between Grading Leniency and Grading Reliability” is based on a data set pertaining to 53,460 courses taught at one unnamed North American university over several years. All sections included 15 or more students with passing grades, and failing grades were tossed out of the analysis to avoid any biasing effect on average grades. The primary focus was whether grades were reliable measures and whether they were lenient. Results suggest they're often neither, though there was plenty of consistent grading.

A leniency score was computed for each section as the “grade lift metric,” or the difference between the average grade a class earned and the average grade point average of the class’s students at the end of the semester. So if a course section’s average grade was B, but the students’ average GPA was 3.5, then the “lift” score was -0.5, indicating tough grading. A positive score indicated lenient grading.
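The arithmetic behind the grade lift metric can be sketched in a few lines of Python. This is only an illustration of the definition as described above, not the study's own code. The function name `grade_lift` and the sample section are hypothetical, and grades are assumed to sit on the usual 4.0 scale (B = 3.0).

```python
from statistics import mean

def grade_lift(course_grades, student_gpas):
    """Average grade awarded in a section minus the average GPA of its students.

    Both lists are assumed to use the same 4.0 scale and to cover the same
    students (hypothetical inputs, for illustration only).
    """
    return mean(course_grades) - mean(student_gpas)

# Hypothetical section: every student earned a B (3.0),
# while the same students' GPAs average 3.5.
section_grades = [3.0, 3.0, 3.0, 3.0]
section_gpas = [3.2, 3.4, 3.6, 3.8]

lift = grade_lift(section_grades, section_gpas)
print(round(lift, 2))  # a negative value indicates tough grading
```

A section whose average grade sits above its students' average GPA would produce a positive value, which the study reads as lenient grading.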

“The core idea is that high grading reliability within a department should result in course grades that correlate highly with each student’s GPA,” reads the study, written by Ido Millet, a professor of business at Pennsylvania State University at Erie.

Course section grading reliability scores were computed based on the same logic. So, in an extreme example, a section in which high-GPA students received low grades and low-GPA students received high grades earned a low reliability score.

Grading reliability averaged 0.62, meaning that in most cases better students received better grades.
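To make the reliability idea concrete, the sketch below scores a section by the Pearson correlation between the grades it awarded and its students' GPAs. That choice is an assumption made for illustration. The article says only that reliability reflects how well course grades track GPA, and the paper's exact formula may differ. The function name `pearson` and all sample data are hypothetical.

```python
from math import sqrt

def pearson(xs, ys):
    """Pearson correlation between two equal-length lists of numbers."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two lists of equal length with at least 2 items")
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        # e.g. every student received the same grade: correlation is undefined
        raise ValueError("correlation is undefined when one list has no variation")
    return cov / sqrt(var_x * var_y)

# Hypothetical five-student section, GPAs on a 4.0 scale.
gpas = [2.0, 2.5, 3.0, 3.5, 4.0]

# Grades that track GPA closely score near +1.
consistent_grades = [2.0, 2.3, 3.0, 3.3, 4.0]

# The "extreme example": high-GPA students get low grades and vice versa.
inverted_grades = [4.0, 3.3, 3.0, 2.3, 2.0]

print(round(pearson(gpas, consistent_grades), 2))
print(round(pearson(gpas, inverted_grades), 2))
```

The sketch also shows a practical wrinkle: a section in which every student receives the same grade has no variation to correlate, so a score cannot be computed for it under this assumption.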

Grading leniency, meanwhile, ranged between a minimum of -1.36 and a maximum of 1.51. “These are extreme values, considering that a grade lift of -1.36 is equivalent to a class of straight-A (4.0 GPA) students receiving average grades slightly below a B-minus (2.67),” the study says. “Similarly, a grade lift of 1.36 is equivalent to a class of C-plus (2.33 GPA) students receiving average grades above an A-minus (3.67).”

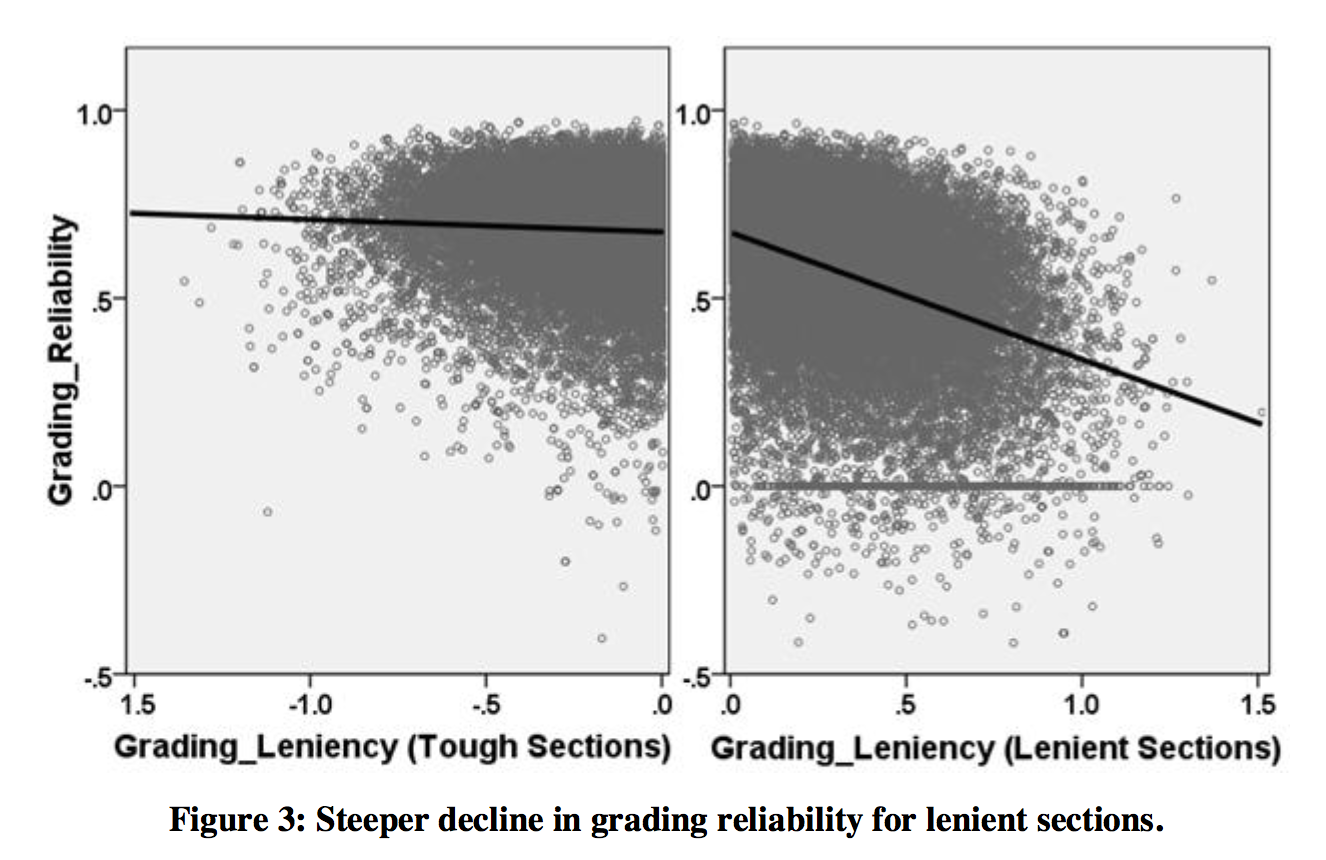

Millet also compared grading reliability in sections with lenient grading (positive grade lift) with sections with tough grading (negative or no grade lift). Data indicated that reliability for tough graders was higher.

Overall, “grading leniency is associated with reduced grading reliability (p < 0.001),” he wrote. “This association strengthens as grading moves from tough to lenient. The standardized slope coefficient changes from -0.04 to -0.42, indicating that the decline in grading reliability associated with a one-unit increase in grading leniency is approximately 10 times larger among lenient-grading sections.”

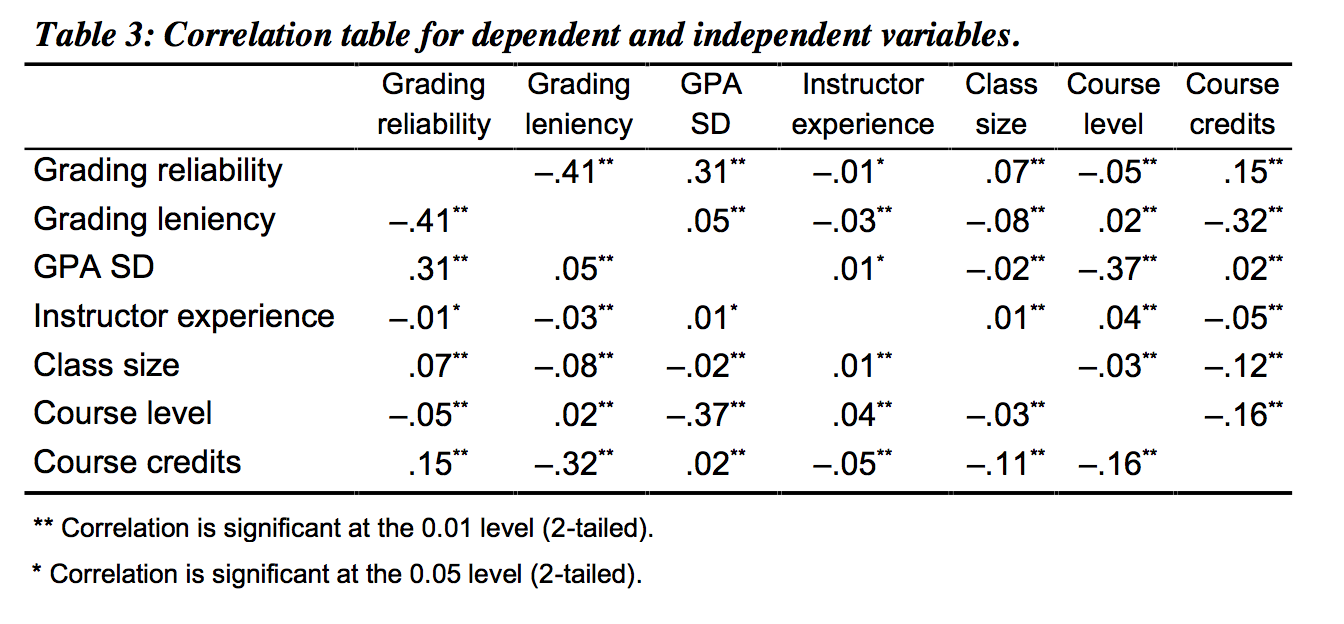

To separate the effect of leniency from other influences on grading reliability, Millet included several additional independent variables in the analysis: standard deviation of GPA within each course section, class size, instructor experience, course level and number of credits. Reliability correlated positively with the standard deviation of GPA within each course section and with course credits -- probably because a four-credit course provides more opportunities for student-professor interaction. There was a low and negative correlation between instructor experience and grading reliability, and classes with more students had higher reliability. There was lower reliability in upper-level courses, probably because of the relative homogeneity of students.

But even after accounting for the effects of other variables, grading leniency still had a significant negative association with grading reliability, according to the study.

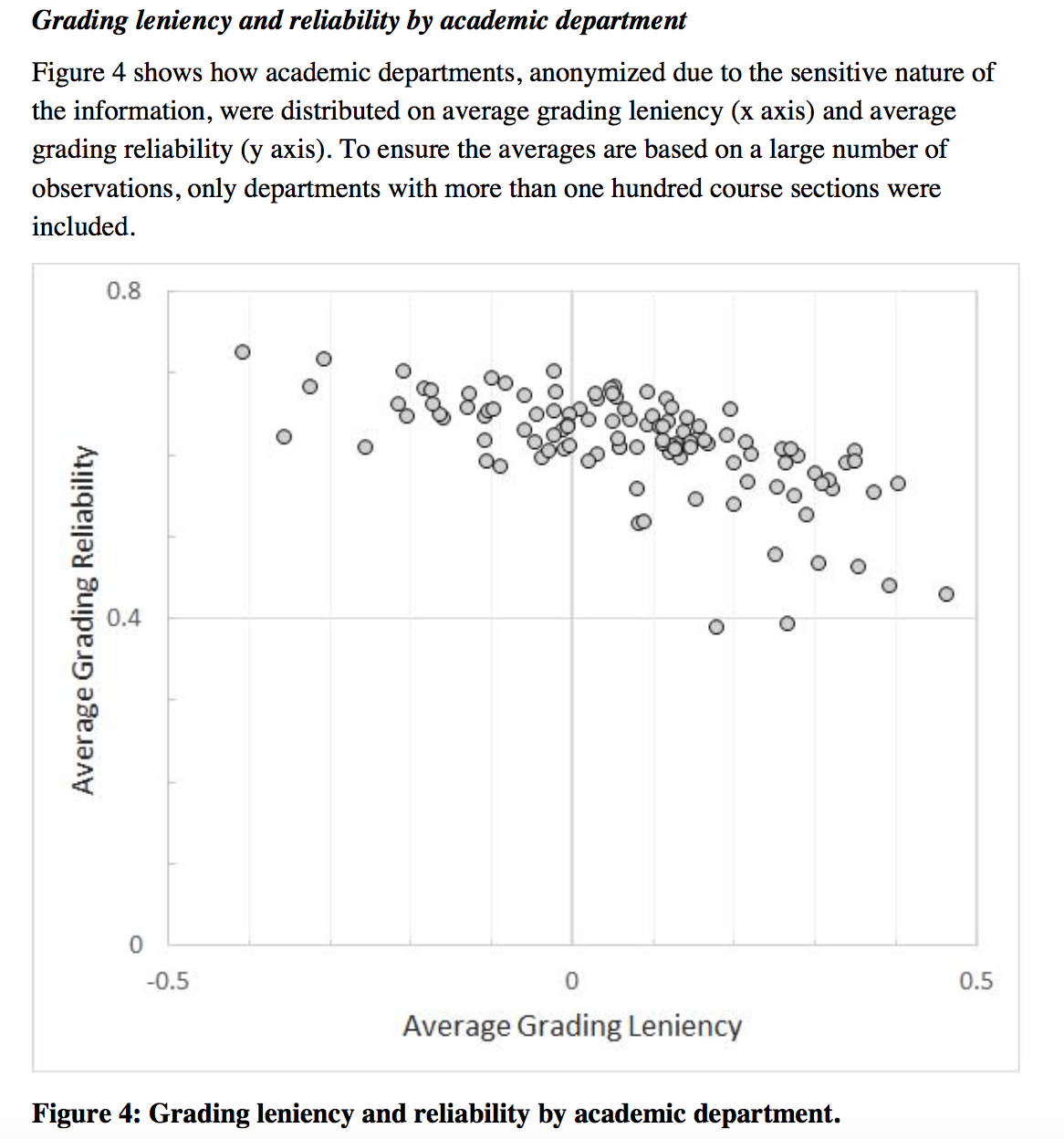

Similar to results for individual course sections, the decline in grading reliability was more pronounced among departments with lenient grading (departments were anonymized in the study).

Another noteworthy finding is that variance in students’ GPA is “a strong contributor to grading reliability in lenient- as well as tough-grading course sections,” Millet says. This may also explain the “weak results” past studies have found about the relationship between grade inflation and grading reliability, Millet says, since the effect of increasing grading leniency over several decades “may have been moderated by a concurrent increase in variability of students’ abilities.”

The study notes several limitations, including that GPA is only a “proxy” for expected performance and that replications using data from other institutions are needed. Yet Millet argues it has several important implications for higher education, such as that future studies of grading reliability should incorporate measurements of variability in students’ abilities, and that grading reliability should be incorporated as an independent variable in studies of whether higher grades lead to higher scores on student evaluations of teaching.

That’s because “students may accept high and unreliable grades, but they might resent low and unreliable ones,” the study says. “This may help resolve the leniency versus validity debate. If the correlation between grading leniency and [evaluation] scores is particularly strong for lenient graders with low grading reliability, this would mean that the leniency hypothesis is correct. In such a case, rather than simply ‘buying’ student satisfaction, the effect of higher grades may be interpreted as avoiding student dissatisfaction when grading reliability is low.”

Millet has previously suggested that giving instructors information about how lenient their grading is compared to their peers’ can significantly reduce variability in grading leniency, and he says future research could test whether the same approach also increases grading reliability.

“The rationale for this hypothesis is that, as institutional norms for grading leniency become visible, extremely lenient graders may become less lenient,” the study says. “This may force such instructors to become more reliable in order to avoid student dissatisfaction.”

At the same time, he says, administrators should “resist” the temptation to use the grading reliability metric to evaluate faculty members. Why? “Such heavy-handed use of this imperfect metric can lead to unintended consequences. For example, faculty members who are concerned about their grading reliability scores might resort to assigning good grades to students with high GPAs and low grades to students with low GPAs.”

Millet also says it would have been useful to include in this study metrics related to the number and type of assessments employed by each course section. Such data can be useful for internal diagnostics. Typical learning management systems already collect limited data about assessments, and by “adding a few more attributes to characterize each assessment, useful reports could be generated to establish and detect deviations from institutional norms.”

He reiterated in an interview that “the main issue here is that grading leniency may be a symptom, rather than a cause, of low grading reliability.” It’s possible that when professors “suspect they have low reliability in the way they grade, they compensate with grading leniency.”

While grade inflation typically gets a lot of attention, Millet said, “what we need to address, and set up some reporting system for, is grading reliability. One of the ways of doing that is to collect data on grading leniency, assessment types and assessment scope for individual course sections. And collecting data about assessments can be facilitated by learning management systems.”