
Rubrics appeared to help fight gender bias in hiring at one engineering department.

Susan Vineyard/iStock/Getty Images Plus

A new in-depth case study in Science finds that faculty hiring rubrics—also called criterion checklists or evaluation tools—helped mitigate gender bias in these decisions. At the same time, researchers found evidence that substantial gender bias persisted in some rubric scoring categories and evaluators’ written comments.

While rubrics proved imperfect, the paper doesn’t recommend abandoning them. Rather, it urges academic units to employ rubrics as a “department self-study tool within the context of a holistic evaluation of semifinalist candidates.”

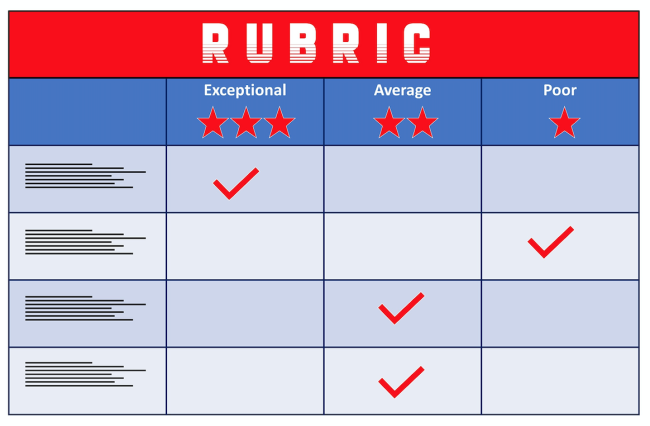

The case study centers on an engineering department at an unnamed research-intensive university. Researchers worked with the department to adapt a pre-existing rubric template from the National Science Foundation–funded Strategies and Tactics for Recruiting to Improve Diversity and Excellence (STRIDE) program at the University of Michigan. This rubric evaluated faculty candidates across six dimensions: research productivity, research impact, teaching ability, contributions to diversity, potential for collaboration and overall impression. Each category was scored from excellent (4 points) to poor (0 points). Written commentary was also encouraged.

During four faculty search cycles over four years, faculty members in the engineering department used the rubrics to evaluate the written application materials of 62 semifinalists: 32 women and 30 men. At the beginning of each faculty meeting focused on selecting finalists, a faculty member presented a summary of the rubric scores and comments. (For these summaries, evaluators were anonymized, and inaccurate or off-topic comments were filtered out.)

To determine whether using rubrics made a difference, the researchers then compared the proportion of women hired during the eight years before rubrics were in use with the proportion hired during the subsequent four-year period.

The university had adopted interventions to increase diversity, equity and inclusion before the engineering department added rubrics. These included diversity training for search committee members and adding contribution-to-diversity statements to application files. Even so, the department in the earlier eight-year period hired eight men and just one woman over eight searches.

In the four-year period involving rubrics, the department hired six men and three women over four searches. The researchers say they can’t attribute this change wholly to rubric use, but they note that the change is significant: “The number of women hired increased from only one per nine hires in Phase One to three per nine hires in Phase Two. The phase with the increased hiring of women coincides with the period when rubrics were used.”
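The hiring counts above reduce to simple proportions. The short Python sketch below recomputes women's share of hires in each phase using only the counts reported in the article. The dictionary layout and the `share_of_women` helper are illustrative names chosen for this example, not anything from the study, and the calculation is purely descriptive: it does not test statistical significance.

```python
from fractions import Fraction

# Hires per phase as reported in the article; no other data is assumed.
phases = {
    "Phase One (8 searches, before rubrics)": {"women": 1, "men": 8},
    "Phase Two (4 searches, with rubrics)": {"women": 3, "men": 6},
}

def share_of_women(counts: dict) -> Fraction:
    """Return women's hires as an exact fraction of all hires in a phase."""
    total = counts["women"] + counts["men"]
    return Fraction(counts["women"], total)

for name, counts in phases.items():
    share = share_of_women(counts)
    # Fraction reduces automatically, so 3/9 is shown as 1/3.
    print(f"{name}: {share} of hires were women ({float(share):.1%})")
```

Run as written, this reports roughly 11.1 percent of hires as women in Phase One and 33.3 percent in Phase Two, matching the article's "one per nine" and "three per nine."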

As for how women were rated during the search process, each of the 62 semifinalists received rubric scores from between six and 21 faculty members (a mean of 13.5 evaluators per candidate and a median of 12). There were statistically significant gender differences in three of the six evaluation categories: women were scored lower than men in research productivity and research impact but higher than men in contributions to diversity.

To see whether gender bias was at play in scoring, the researchers examined whether women actually demonstrated lower research productivity by looking at the number of articles they’d published and their H-indexes (a measure of a researcher’s output and impact). The authors found that female candidates, on average, received statistically significantly lower productivity rubric scores than men, even after controlling for seniority (measured as the number of years since earning their Ph.D.) and number of articles published. Women also received significantly lower scores on average than men when controlling for seniority and H-index. Women’s productivity rubric scores were consistently below those of men with the same number of published articles and seniority, with women facing an average penalty of 0.36 points. Measured another way, the paper says, female candidates face an 18 percent penalty for being a woman. At the lowest tail of the H-index distribution, men received research productivity rubric scores that were 0.7 points higher than those of women with the same seniority, which equated to an approximately 35 percent penalty for being a woman. “Thus, rubric scoring alone did not appear to fully mitigate gender bias,” the paper says.

In an analysis of written rubric comments, 86 percent of male candidates but only 63 percent of female candidates received at least one positive comment. Men were half as likely as women to receive a negative comment. And men were 3.5 times more likely than women to receive “standout” language (32 percent versus 9 percent). Thirteen percent of women and 25 percent of men received a doubt-raising comment.
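The “3.5 times” figure follows directly from the two reported percentages. As an illustrative check, the sketch below divides men’s rate by women’s rate for each comment type the article reports as a percentage for both groups; negative comments are left out because only a relative figure (“half as likely”) is given. The variable names and the `rate_ratio` helper are hypothetical, chosen for this example.

```python
# Share of candidates receiving each comment type, as reported in the article.
men = {"positive": 0.86, "standout": 0.32, "doubt_raising": 0.25}
women = {"positive": 0.63, "standout": 0.09, "doubt_raising": 0.13}

def rate_ratio(a: float, b: float) -> float:
    """How many times as often group a received a comment type as group b."""
    return a / b

for kind in men:
    # A ratio above 1 means men received this comment type more often.
    print(f"{kind}: men/women = {rate_ratio(men[kind], women[kind]):.2f}")
```

For standout language this gives about 3.56 (32 divided by 9), which the article reports as 3.5 times.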

‘How Well They Actually Work’

The researchers surveyed the engineering faculty members at the end of their study. Most said that presenting summaries of semifinalists’ rubric scores at the beginning of every related meeting prompted attendees to focus more on objective criteria (78 percent) and improved the meeting climate (80 percent). The faculty meeting format may also have mitigated gender bias in the research productivity scores and the selection of finalists: 47 percent of women semifinalists and 37 percent of men semifinalists advanced to the finalist stage.

Co-author Mary Blair-Loy, professor of sociology at the University of California, San Diego, said that while rubrics are widely recommended as a best practice in academic hiring, the new study is (to her knowledge) the first empirical analysis of “how well they actually work in real faculty searches.”

“Rubrics are generally better than no rubrics if they prompt evaluators to slow down and more deliberately consider how well each candidate actually fulfills the previously agreed-upon criteria for the position,” Blair-Loy said. “However, rubrics are not a panacea. As our analysis shows, individual evaluator bias can get smuggled into evaluations in this seemingly objective process.”

While the paper only examines rubrics in hiring for engineering (in which just 18 percent of faculty positions are held by women, according to the study), Blair-Loy said its findings are likely applicable to other fields. Asked if rubrics would help mitigate biases other than gender, such as those related to race, she said it’s “absolutely worth a try. I would encourage departments to try to do this and adjust our policy template accordingly.”

She added that when using rubrics to check any kind of bias, “it’s important to track the results over time. This kind of self-study can help units assess how fairly, in the aggregate, rubrics are being applied and whether they need a course correction.”

Damani White-Lewis, now an assistant professor in the University of Pennsylvania’s Graduate School of Education, wrote a 2020 study on how the concept of faculty fit can perpetuate bias in hiring unless it is standardized, for example through jointly designed rubrics. He also has a new paper under review on the use of rubrics in engineering, psychology and biology.

“We found pretty similar results—that rubrics are overall helpful but do not completely mitigate bias,” White-Lewis said last week, comparing his findings to Blair-Loy’s. “From both a racial equity and gender equity perspective, we found that rubrics did not mitigate cognitive and social biases, or double standards, especially during interviews and conversations about candidates in later stages of the search.”

Rubrics can be helpful for bringing diversity, equity and inclusion matters “to the forefront to act as counterbalances” to other measures that “we know historically reward white male researchers,” he said, but rubrics also “need to be paired in a suite of equity-minded interventions to bring about the most change.”