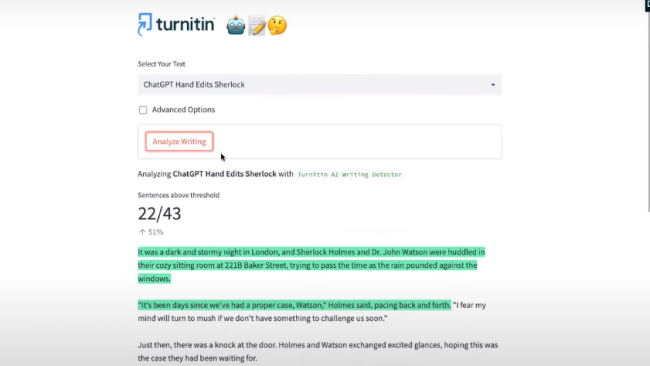

A preview of Turnitin’s new AI detector, Originality, set for a trial release on April 4. Some faculty and technology specialists have raised concerns about the tool’s accuracy and Turnitin’s product rollout.

Screenshot from YouTube

When Turnitin was launched in 1998, the early ed-tech start-up promised a solution to one of the most pressing threats to academic integrity in the nascent internet era: easy plagiarism from online sources.

Twenty-five years later, the question on every classroom instructor’s lips has shifted from “how do I know if my student is copying someone else’s work?” to “how do I know this essay wasn’t written by a robot?”

That question has been asked more frequently, and more frantically, since the March release of the fourth evolution of ChatGPT, the generative artificial intelligence that has demonstrated an uncanny talent for imitating human language and thought. The newest model has already aced the Uniform Bar Exam and passed a Wharton M.B.A. test.

Tomorrow, Turnitin will release a “preview” of its newly developed AI-detection tool, Originality. In doing so, the company will try to convince its significant subscriber base in higher ed and beyond that it has the solution—or at least an essential piece of the solution—to the latest technological threat to academic integrity.

“Educators have been asking for a tool that can reliably help them detect written material that has likely been written by AI,” a spokesperson for Turnitin wrote in an email to Inside Higher Ed. “Turnitin is answering that call.”

But copy-paste plagiarism and generative AI are birds of radically different feathers. Some faculty members and institutional technology specialists are concerned about the speed of Turnitin’s rollout, as well as aspects of AI-detector technology more broadly.

“We are just beginning to have conversations with instructors about AI-generated writing in multiple contexts, but the sudden and unexpected availability of detection technology significantly shifts the tone and goals of these discussions,” Steven Williams, principal product manager at the Bruin Learn Center of Excellence at the University of California, Los Angeles, wrote in an email to Inside Higher Ed. “Introducing this feature is a major change, but Turnitin’s timeline does not offer sufficient time to prepare technically or pedagogically.”

Other experts say the pace of progress in AI technology will quickly render any marketed solution obsolete. Originality, they fear, may already be behind the times—it wasn’t trained on GPT-4, which was released just last month, nor does it have experience with Bard, Google’s buzzy new generative AI.

“This is a technological arms race. The technology comes out, and of course ed tech wants to come out with the detection, and then GPT is going to update in another few months,” said Michael Mindzak, an assistant professor in the department of educational studies at Brock University. “So [Turnitin’s AI detector] is not really a solution. It’s more of a temporary stopgap.”

A Turnitin spokesperson acknowledged the pace of AI evolution and said the company would work to update Originality frequently.

“This being said, remember that the point of Turnitin’s AI detection is to give educators a starting point for having a conversation,” they said. “We expect that educators will evolve in their thinking as this technology evolves.”

A Rapid Rollout, and No Way to TurnitOff

When Turnitin announced the trial launch of Originality in February, Williams said he immediately had a host of concerns. Chief among them were the inability of faculty and institutions to opt out of the service and the speed at which Originality would be introduced. He was also concerned that institutional partners hadn't been given the chance to review the technology before it was made available to their faculty.

“By placing this feature into our learning environments, it can easily give instructors the impression that it has been vetted by our campus,” he wrote. “This is not the case—our campus privacy, security, accessibility and other teams have not been given any opportunity to review the new feature as we would for any other new IT service.”

He posted his worries on the Canvas R1 Peers Listserv, an online forum for information services officials at research universities across the country, and he said many of his colleagues echoed his fears.

A spokesperson for Turnitin said that while there was “no option to turn off the feature” for the vast majority of users, the company had made an exception for “a select number of customers with unique needs or circumstances.”

Mindzak said the rise of generative AI has created a paranoid frenzy in higher ed that the technology sector has been eager to pounce on. He believes Originality’s swift rollout is motivated not by a desire to provide a timely solution to an urgent problem but by the company’s desire to fill a gaping market demand before potential competitors beat it to the punch.

“Ed-tech opportunism is a big part of this,” he said. “Those companies are always looking for inroads into institutions and the creation of new markets.”

Rahul Kumar, also an assistant professor in Brock’s department of educational studies, said he worried that Turnitin’s grab for market share could have a negative effect on higher ed’s ability to thoughtfully deal with the issues raised by generative AI, especially for faculty members who are unfamiliar with the technology and eager for a simple solution.

“The fear is that professors who are opposed to AI detectors ideologically would throw up their hands and not use Turnitin at all, and then others will just say, ‘I don’t have to worry about it because Turnitin said it is good or not good,’” said Kumar, who has also worked as a software developer and systems administrator. “I don’t think it’s going to help practically, but it will give quite a bit of comfort to the universities and relief to some instructors. And that may pre-empt any innovation in curriculum to address the AI problem.”

Williams expressed similar concerns, citing Turnitin’s plans to restrict Originality to customers with a specific subscription once a roughly yearlong free trial, open to all of its paying subscribers, comes to an end.

“Attempting to create instructor demand around this feature now, with a paywall looming in less than a year for many institutions, seems to be an inappropriate effort to market this functionality to instructors and create demand that would need to be addressed with expanded institutional contracts,” he wrote.

A spokesperson for Turnitin would not confirm the timeline of Originality’s full release, but Williams said the original plan was for the trial period to run out in January 2024.

Trusting Turnitin’s ‘Black Box’

Turnitin asserts that Originality can detect 97 percent of writings generated by ChatGPT and GPT-3. The company also says that its tool has a very low false positive rate—Eric Wang, Turnitin’s vice president of AI, told Market Insider that it was around 1 percent.

“Generative AI uses a mathematical model to identify and encode patterns of data and then predict which word should come next,” the Turnitin spokesperson wrote. “This is also why detection works, because this model creates a distinct statistical signature that looks very different from what humans tend to create in their writing. We can detect that difference.”

Kumar said he didn’t entirely trust Turnitin’s accuracy claims. After all, he said, there’s been no publicly available peer-reviewed research on Originality, and no insight into how accurate it will be when faced with more sophisticated AI than it was trained on. Meanwhile, GPTZero—another AI detector—recently determined that large chunks of the U.S. Constitution were likely written by an AI, an obvious false positive.
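To see why a false positive rate of about 1 percent can still matter, the arithmetic can be sketched in a few lines of Python. This is a back-of-the-envelope illustration, not Turnitin’s method: the 97 percent detection rate and 1 percent false positive rate are the company’s stated figures, while the function name, the number of essays and the share of essays actually written by AI are assumptions chosen purely for illustration.

```python
def flagged_breakdown(n_essays, ai_share,
                      detection_rate=0.97, false_positive_rate=0.01):
    """Estimate how many flagged essays are truly AI-written.

    detection_rate and false_positive_rate default to the figures
    Turnitin has cited; n_essays and ai_share are hypothetical inputs.
    """
    ai_essays = n_essays * ai_share          # essays actually written by AI
    human_essays = n_essays - ai_essays      # essays written by students

    true_flags = ai_essays * detection_rate            # AI essays correctly flagged
    false_flags = human_essays * false_positive_rate   # human essays wrongly flagged

    total_flags = true_flags + false_flags
    # Share of flagged essays that really were AI-written
    share_correct = true_flags / total_flags if total_flags else 0.0
    return true_flags, false_flags, share_correct


# Hypothetical course loads: 1,000 essays, with 10% or 1% written by AI
for share in (0.10, 0.01):
    true_flags, false_flags, share_correct = flagged_breakdown(1000, share)
    print(f"AI share {share:.0%}: {true_flags:.1f} correct flags, "
          f"{false_flags:.1f} false flags, "
          f"{share_correct:.0%} of flags correct")
```

Under these assumptions, the fewer students who actually use AI, the larger the fraction of flagged essays that belong to students who did nothing wrong, even if the advertised rates hold exactly.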

Williams said another issue he has with Originality is that, unlike Turnitin’s flagship plagiarism-detection tool, it offers no objective evidence for its results, only statistical probabilities. Originality may claim there is a 95 percent likelihood that a text was AI generated, for instance, but that algorithm’s sources wouldn’t be publicly available, whereas Turnitin’s plagiarism detector displays the sources in which it found similarities with a student’s text.

This lack of transparency, Williams said, has implications for Originality’s use in the classroom. Without objective evidence or student access, he said, the tool risks enabling a punitive culture based on speculative algorithms, a departure from the company’s messaging around its plagiarism detector.

“Historically, Turnitin has supported a message that these matching passages should not immediately be interpreted as plagiarism by an instructor … [and] this message has helped build a relationship of trust,” Williams wrote. “The nature of the new AI detection feature disrupts this relationship.”

A spokesperson for Turnitin said Originality should not be used punitively and emphasized that the tool is merely meant to “assist educators” in identifying AI-generated text or in developing lessons relating to it.

“All Turnitin integrity solutions are designed to highlight academic work that an educator may decide merits talking about with their student,” the spokesperson wrote. “No academic integrity solution should ever be used to singlehandedly decide matters of misconduct.”

Anna Mills, an English instructor at the College of Marin and a self-described “advocate for critical AI literacy,” said developing policies for how to deal with generative AI is a crucial first step for faculty to take before adopting AI detection tools like Originality. But most faculty have yet to take that step, according to a recent survey by Primary Research Group.

“That’s why it should really be something you have to opt in to,” Mills said. “Faculty who don’t really know what AI is or haven’t talked to their classes about it—I don’t think they’re ready to be using this kind of tool in grading students.”

She added that it’s especially important to include students in the policy-making process, as one professor at Boston University did with his Data, Society and Ethics class. The policy they designed together is now the departmentwide standard; Mills said this kind of collaborative approach can protect against arbitrary punishment and preserve trust in the classroom.

Mindzak believes the ideal solution, and the one most immune from obsolescence, would be to go completely analog—just a pen and paper, the old-fashioned way. No internet in class and no laptops, he said, means no use for generative AI or its detectors.

“Does higher ed even need ed tech? I would argue no; I might even say we’d be better without it,” he said. “But I know that’s not likely … It is kind of like a speeding train at this point. Maybe we should slow down and take a step back, but it’s hard to stop the inertia.”

Mills said generative AI hasn’t made a Luddite of her yet; she believes AI detectors can serve plenty of benevolent functions, not only for ensuring academic integrity but also as an aid for incorporating AI into the curriculum. Beyond the classroom, she added, they can have even more important benefits, like identifying AI-generated disinformation.

“The conversation in academia is too narrowly focused on academic dishonesty and not connecting it to the larger societal reasons for why we need this kind of software,” she said. “Rather than fighting about whether we support Turnitin, we should focus on the broader societal need for AI text identification and the importance of establishing this norm of transparency.”

Mills is hopeful that the final version of Originality will be both effective and transparent. For her, that would mean students could test their own results before submitting, peer-reviewed research would be conducted on its accuracy and, most importantly, faculty would have more time to discuss the issue thoughtfully with students.

For now, though, she’d prefer to opt out.