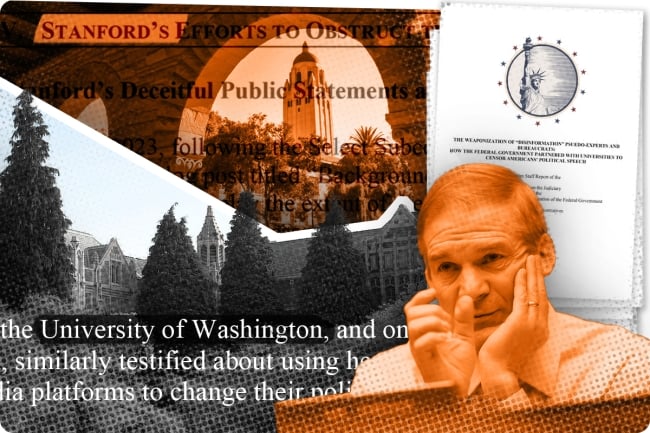

Jim Jordan, the U.S. House Judiciary Committee chairman, is investigating misinformation and disinformation researchers who have partnered with the federal government.

In the two months leading up to the November 2020 elections, and in the following weeks, the Election Integrity Partnership identified and tracked rampant online falsehoods and notified social media companies about them.

When they detected possible election-related mendacity, the partnership’s analysts, including Stanford University and University of Washington students supervised by academics at those institutions, created “tickets” that the partnership would sometimes share with social media companies. A ticket could flag a single online post or hundreds of URLs and entire narratives, the group said in a 2021 report.

The document said the partnership’s “objective explicitly excluded addressing comments made about candidates’ character or actions.” Instead, it was “focused narrowly on content intended to” interfere with voting “or delegitimize election results without evidence.”

Groups and government agencies that the partnership dubbed “trusted external stakeholders,” such as the NAACP and the State Department’s Global Engagement Center, could submit their own tickets, but the analysts sometimes deemed such submissions “out of scope.”

The partnership notified social media companies about, for example, claims that ballots had been invalidated because voters were forced to use Sharpie markers. A government partner, unnamed in the report, provided a written explanation to the partnership and social media companies for why the rumor was wrong.

The partnership said that, of the 4,832 URLs it reported to social media platforms, the companies “took action” on 35 percent—either by labeling them with warnings, removing them or, in Twitter’s case, “soft blocking” them by requiring users to bypass a warning to view them. (The report’s numbers on post removals may have been inflated, since they include users deleting their own posts, for whatever reason.) The report said it’s possible that additional content was “downranked” through platforms, too, making it appear lower in users’ social media feeds.

Several universities created misinformation and disinformation research centers in the wake of Russian attempts to interfere in the 2016 presidential election. Researchers tracked the spread of COVID-19 pandemic conspiracy theories and analyzed election-related falsehoods through the 2020 presidential race and Donald Trump’s subsequent denials of his loss in it.

But Republicans have latched on to the Election Integrity Partnership’s role in 2020, and academics both involved and uninvolved with that collaboration say they’ve faced pressure ranging from onerous open records requests to U.S. House investigations, lawsuits and even death threats. The Supreme Court has also taken up litigation that aims to, among other things, prevent federal government officials from urging, pressuring or inducing social media platforms to restrict access to content.

House Judiciary Committee chairman Jim Jordan, an Ohio Republican, summed up the conservatives’ objections in a post on X last month: “The federal government, disinformation ‘experts’ at universities, Big Tech, and others worked together through the Election Integrity Partnership to monitor & censor Americans’ speech,” he said.

This pressure on the research field has generated a narrative that these researchers’ scholarship has been silenced, reflected in a Washington Post headline reading “Misinformation research is buckling under GOP legal attacks.” But academics say they are continuing their studies. While an Election Integrity Partnership–like model may not be in place for the 2024 elections, continued research and advancing technology may provide other means to stem the real-time spread of lies.

Whether social media platforms such as X—formerly named Twitter and now owned by Elon Musk—will listen is another matter. X has laid off swaths of its content moderators, and Meta, which owns Facebook, has also reduced its ranks of moderators.

And House Republicans have subpoenaed documents from researchers of misinformation and disinformation (intentional misinformation) who weren’t in the election partnership, meaning new models could also be threatened. An “interim staff report” from the Republicans’ investigation includes a broad condemnation of the field.

“The pseudoscience of disinformation is now—and has always been—nothing more than a political ruse most frequently targeted at communities and individuals holding views contrary to the prevailing narratives,” says the 104-page report, released last month by the Republican-controlled House Judiciary Committee and its Select Subcommittee on the Weaponization of the Federal Government. The document says the Election Integrity Partnership “provided a way for the federal government to launder its censorship activities in hopes of bypassing both the First Amendment and public scrutiny.”

However, some of the same researchers who say they see a general threat to their field are countering the narrative that they’ve been shut up.

“We don’t want folks to think that you can just bully an entire field into not existing anymore—like, that’s not how things work,” said Dannagal Young, a University of Delaware communication and political science professor who researches this area.

The misinformation and disinformation field involves more than the controversial practice of actively monitoring social media sites and directly reporting posts to those platforms. That practice grows more controversial, and raises the First Amendment concerns currently being litigated, when the government is a partner.

Alex Abdo, litigation director at Columbia University’s Knight First Amendment Institute, said, “Researchers and the platforms have First Amendment rights of their own, and it’s important not to forget those researchers have a First Amendment right to study just about anything they choose.” He said their rights also allow them to notify platforms about disinformation and to push these companies to adopt and enforce policies against it.

Abdo isn’t a misinformation and disinformation researcher himself. But he said the House investigation “is so sweeping that it is having a chilling effect on constitutionally protected research.”

“I’m worried that we’re heading into the 2024 election cycle with a blindfold on,” he said.

Investigating, While Under Investigation

The Election Integrity Partnership’s higher education partners were the Stanford Internet Observatory and the University of Washington Center for an Informed Public (CIP). House Republicans have called on Kate Starbird, the Washington center’s director, to testify as part of their investigation, and some conservatives alleging censorship are also suing her personally in an Election Integrity Partnership–related case.

“The primary role of misinformation research is not to alert platforms to content, and the CIP has never seen that as core to our work,” Starbird said in an email.

“Today, this political pressure has frozen communications between researchers and platforms, as neither feel comfortable communicating with each other,” she said. However, she said her center “will continue our real-time analysis efforts and publish reports that platforms are welcome to use as they see fit. Our work is not stopping.”

Their work, however, no longer involves directly informing social media companies about falsehoods currently spreading online.

In October, the center’s website published a “rapid research report” on seven X accounts posting about the Israel-Hamas war and receiving far more views than traditional news sources. The report says Musk, the platform’s owner, has promoted these accounts, and the majority of them rarely cite their sources.

The center recommends that fellow researchers and reporters consider increasing their attention to X because “Our research suggests that X is changing rapidly, in ways not fully apparent even to people like us, who have followed the platform for years.”

The Stanford Internet Observatory is also still operating. Former director Alex Stamos, who remains affiliated with the entity, co-wrote an October post on the observatory’s website about how the decentralized nature of the social network Mastodon can present opportunities for abuse. The post also provides resources to help those running servers on this newer social media platform address the problem.

In response to requests for interviews on how the Stanford Internet Observatory will handle the upcoming election, the university instead emailed a comment.

“The Stanford Internet Observatory is continuing its critical research on the important problem of misinformation,” a spokeswoman wrote in the email. “The university is deeply concerned about ongoing efforts to chill freedom of inquiry and undermine legitimate and much needed academic research in the areas of misinformation—both at Stanford and across academia.”

The Washington Post reported that Stamos, at least, has said the congressional investigation has “been pretty successful, I think, in discouraging us from making it worthwhile for us to do a study in 2024.”

Mike Wagner, Helen Firstbrook Franklin Professor of Journalism and Mass Communication at the University of Wisconsin at Madison, received a letter from Jordan, the House Judiciary chair, in August requesting documents—followed by a subpoena in September demanding them. The August letter, which Wagner provided to Inside Higher Ed, noted that Course Correct, a project the University of Wisconsin at Madison is involved in, was funded by the National Science Foundation. The letter said the grant program was very similar “to efforts by other federal agencies to use grants to outsource censorship to third parties.”

Wagner said the project has been renamed “Chime,” “in order to better reflect what we are doing—which is chiming in to conversations online with verifiably accurate information.” He hopes this makes it “less likely that some folks out there will willfully misinterpret and mischaracterize what we are doing.”

He said the project aims “to build a tool that journalists, and potentially others,” including companies and governments, could use to find “networks” of misinformation or unverified information spreading on websites such as Reddit and X. Those using the tool could then “chime in” with information on those same networks.

“That’s not telling a social media company to throttle a post or engage in content moderation,” Wagner said.

“The fact that that kind of work is being characterized as information suppression or colluding with a presidential administration is as bonkers as it is dangerous,” he said.

Asked if Chime could be used to counter election mis- and disinformation in 2024, Wagner told Inside Higher Ed that he hopes “it will be of use during the 2024 election cycle, but we won’t rush to put something out before we are confident that our tool is working as intended, is easy to use and can refresh with new content in verifiably accurate ways. The task of figuring out how to label, at a very high level of accuracy, networks of low-quality information is a difficult problem to solve in real time, and we do not serve anyone well if we get it wrong.”

Jordan’s September follow-up letter to Wagner said, “It is necessary for Congress to gauge the extent to which the federal government or one of its proxies worked with or relied upon the University of Wisconsin in order to censor speech. The scope of the Committee’s investigation includes intermediaries who may or may not have had a full understanding of the government’s efforts and motivations.”

The attached subpoena requested, among other things, communications between the university and the federal government and companies about content moderation, and it defined the “content” for which Republicans were seeking evidence of suppression. The definition includes content relating to mail-in ballots, voter fraud allegations, the COVID-19 fatality rate, “issues related to gender identity and sexual orientation,” and conservative humor from The Babylon Bee.

“They define content in like a Mad Libs of conspiracy theories,” Wagner said. “So it’s like the Great Barrington Declaration [a statement by three epidemiologists opposing lockdowns], Hunter Biden’s laptop, critical race theory,” he said. “We literally had no communications about any of those things.”

A House Judiciary spokesperson wrote in an email to Inside Higher Ed that the “ongoing investigation centers on the federal government’s involvement in speech censorship, and the investigation’s purpose is to inform legislative solutions for how to protect free speech. The Committee sends letters only to entities with a connection to the federal government in the context of moderating speech online.”

Wagner said, “It sure feels like the goal is to stop this research. I’ll say that for our own team, it’s slowed us down, but it hasn’t shaken our resolve or changed our interests.”

‘Whac-A-Mole’

Jonathan Turley, Shapiro Chair for Public Interest Law at George Washington University, has spoken critically about Wagner’s project and other government-supported efforts to address misinformation, disinformation and malinformation. Turley described the last of these as “the use of true facts in a way that is deemed dishonest or misleading.”

“The sweep of these three terms is quite broad,” he said. “Indeed, there’s very little definition.”

He said he doesn’t think these researchers have been silenced. Instead, he said critics like him are “losing ground by the day.”

“This is sort of a game of Whac-A-Mole,” he said. “Every time we address” one government-funded program, he said, “another grant pops up.”

“There’s been a hue and cry from these projects that they are feeling the pressure of this criticism, but many of us are objecting that they are incorporating academic institutions into an unprecedented censorship system,” he said. “You have a sort of triumvirate of government, corporate and academic groups that have become aligned in this effort, and I believe that that is worthy of criticism. It is certainly worthy of debate.”

Turley said, “None of us would ever object to an academic focusing” on this field, but “what we are objecting to are projects that are designed to play an active role in combating disinformation in alliance with the government and corporate offices.”

The state of misinformation research is complex—some initiatives are ending, but others are beginning, all under the continued specter of possible further investigations.

Darren Linvill, co-director of Clemson University’s Media Forensics Hub, testified this summer as part of the investigation.

“We have not heard from Congress again,” Linvill said. “You know, we’d had a pretty open and frank conversation with them, and I think that, from everything that I could tell, they learned a lot about the kind of work that we do and the focus of our research.”

“A lot of our work tends to be more apolitical,” he said. He did say Freedom of Information Act requests from people outside Congress—including both professional journalists and citizens who’ve been investigating this research—continue to take up time.

“A lot of the potential fallout from this we still don’t know,” he said. “I think that it has the potential to really affect funding sources in the future.”

He said his group raised $55,000 at a 2018 Clemson donor event to create spotthetroll.org, a website that teaches users, through a short quiz, how to better spot fake social media accounts in order to avoid being deceived. He said it’s still used around the world and he would like to create a new iteration.

“Five years ago I think these issues were less political than they are today, and I don’t know—if I were to pass that hat today—I don’t know if I’d get as excited of a response,” he said.

But Linvill said the Media Forensics Hub has added four faculty members and is adding postdoctoral workers, graduate students and staff, through a $3.8 million Knight Foundation grant matched by $3.8 million from Clemson. The foundation also partly funds the Knight First Amendment Institute at Columbia, which is providing legal support for misinformation and disinformation researchers who are facing litigation and investigations.

Young, the University of Delaware professor, who hasn’t been subpoenaed, said she thinks it’s fair to say the time researchers must spend “dealing with these legal challenges and making sure they have lawyers and, you know, dotting all the i’s and crossing all the t’s—that is certainly going to have an impact on the amount of bandwidth they have left over.”

In her own department, she said graduate students, many of them people of color and/or LGBTQ+, are looking at the price some others pay to do this research and deciding to focus elsewhere.

“The most glaring censorship of all is the chilling effect that these subpoenas and these crackdowns are having on what I consider the most important kind of speech, which is the production of knowledge,” she said. If younger scholars are dissuaded from this research, “we’ll never know the amazing ways that they would answer these questions.”

But she said the narrative that current researchers are “six feet under” only “helps the folks who are using this as an opportunity to advance their own fictions.” Researchers, she said, are “still in the game.”