Some universities are creating their own versions of tools like ChatGPT in an effort to overcome security and ethical concerns.

Gorodenkoff/iStock/Getty Images

When ChatGPT debuted in November 2022, Ravi Pendse knew fast action was needed. While the University of Michigan formed an advisory group to explore ChatGPT’s impact on teaching and learning, Pendse, UMich’s chief information officer, took it further.

Months later, before the fall 2023 semester, the university launched U-M GPT, a homebuilt generative AI tool that now has between 14,000 and 16,000 daily users.

“A report is great, but if we could provide tools, that would be even better,” Pendse said, noting that Michigan is very concerned about equity. “U-M GPT is all free; we wanted to even the playing field.”

The University of Michigan is one of a small number of institutions that have created their own versions of ChatGPT for student and faculty use over the last year. Those include Harvard University, Washington University, the University of California, Irvine, and UC San Diego. The effort goes beyond jumping on the artificial intelligence (AI) bandwagon—for the universities, it’s a way to overcome concerns about equity, privacy and intellectual property rights.

Students can use OpenAI’s ChatGPT and similar tools for everything from writing assistance to answering homework questions. The newest version of ChatGPT costs $20 per month, while older versions remain free. The newer models have more up-to-date information, which could give students who can afford it a leg up.

That fee, no matter how small, creates a gap unfair to students, said Tom Andriola, UC Irvine’s chief digital officer.

“Do we think it’s right, in who we are as an organization, for some students to pay $20 a month to get access to the best [AI] models while others have access to lesser capabilities?” Andriola said. “Principally, it pushes us on an equity scale where AI has to be for all. We need to talk about ‘AI for good’ of course, but let’s talk about not creating the next version of the digital divide.”

UC Irvine publicly announced its own AI chatbot, dubbed ZotGPT, on Monday. Deployed in various capacities since October 2023, it remains in testing and is available only to staff and faculty. The tool can help them with everything from creating class syllabi to writing code.

Offering its own version of ChatGPT allows faculty and staff to use the technology without the concerns that come with OpenAI's version, Andriola said.

“When we saw generative AI, we said, ‘We need to get people learning this as fast as possible, with as many people playing with this that we could,’” he said. “[ZotGPT] lets people overcome privacy concerns, intellectual property concerns, and gives them an opportunity of, ‘How can I use this to be a better version of myself tomorrow?’”

Intellectual property has been a major concern and a driver behind universities creating their own AI tools. OpenAI has not been transparent about how it trains ChatGPT, leaving many worried about how research data might be used and about potential privacy violations.

Albert Lai, deputy faculty lead for digital transformation at Washington University, spearheaded the launch of “WashU GPT” last year.

WashU, along with UC Irvine and the University of Michigan, built its tool using Microsoft's Azure platform, which allows users to integrate the work into their institution's applications. The platform uses open source software available for free. In contrast, proprietary platforms like OpenAI's ChatGPT have an upfront fee.

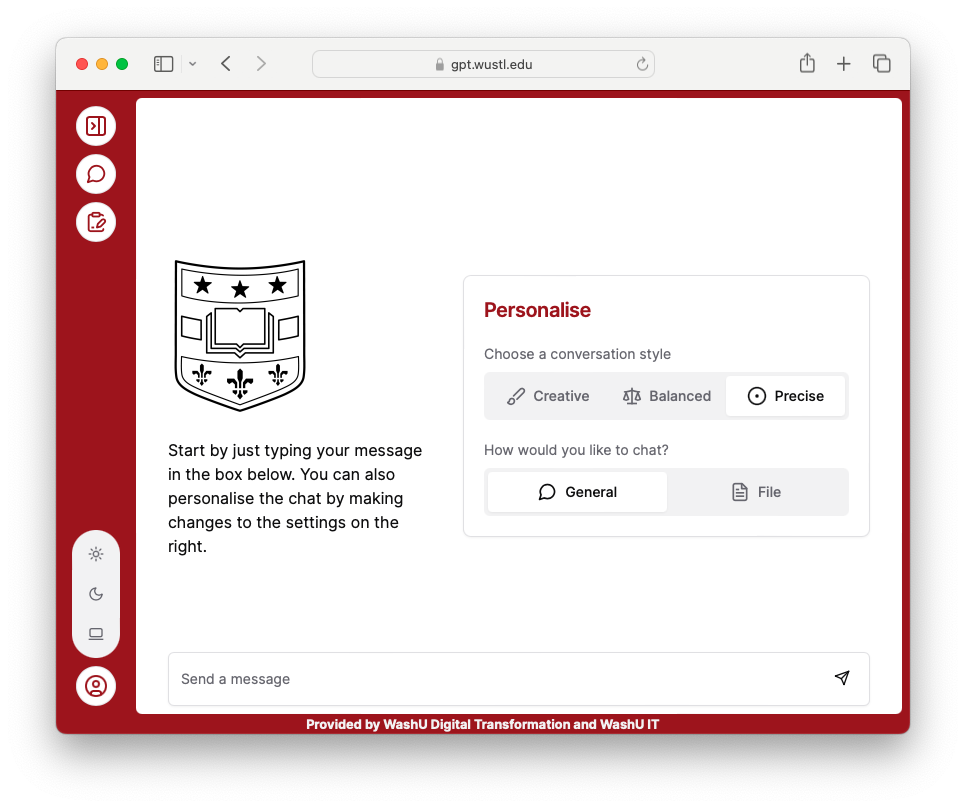

A look at WashU GPT, a version of Washington University’s own generative AI platform that promises more privacy and IP security than ChatGPT.

Provided/Washington University

There are some downsides when universities train their own models. Because a university's GPT is based on the research, tests and lectures an institution puts into it, it may not be as up to date as the commercial ChatGPT.

“But that’s a price we agreed to pay; we thought about privacy, versus what we’re willing to give up,” Lai said. “And we felt the value in maintaining privacy was higher in our community.”

To keep data private within a university's GPT, Lai encouraged other institutions to make sure any institutional agreements with Microsoft include data protection for IP. UC Irvine and UMich also have agreements with Microsoft stipulating that any information put into their GPT models stays within the university and is not made publicly available.

“We’ve developed a platform on top of [Microsoft’s] foundational models to provide faculty comfort that their IP is protected,” Pendse said. “Any faculty member—including myself—would be very uncomfortable in putting a lecture and exams in an OpenAI model (such as ChatGPT) because then it’s out there for the world.”

It remains to be seen whether more universities will build their own generative AI chatbots.

Consulting firm Ithaka S+R formed a 19-university task force in September dubbed “Making AI Generative for Higher Education” to further study the use and rise of generative AI. The task force members include Princeton University, Carnegie Mellon University and the University of Chicago.

Lai and others encourage university IT officials to keep experimenting with publicly available tools, work that can eventually evolve into their own versions of ChatGPT.

“I think more places do want to do it and most places haven’t figured out how to do it yet,” he said. “But frankly, in my opinion, once you figure out the magic sauce it’s pretty straightforward.”