Morehouse College is launching animated, AI-powered bots that students can ask questions any time, anywhere.

Morehouse College/VictoryXR

For years, Ovell Hamilton has answered student calls and questions on his cellphone until 11 each night. But this coming semester, the Morehouse College history professor will be available in a new way: as a digitized, animated, AI-powered version of himself that can answer course questions and showcase historical maps or figures such as Abraham Lincoln.

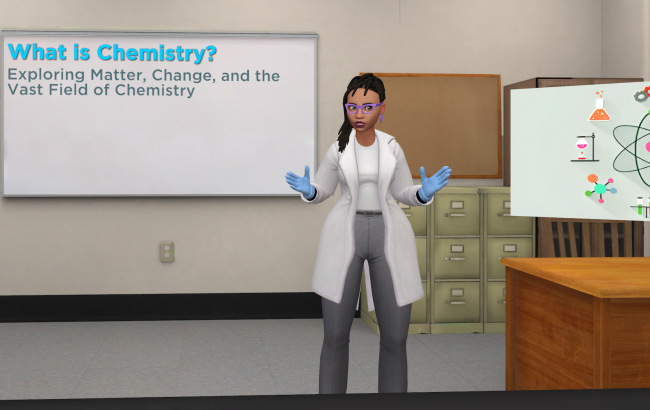

Morehouse is rolling out 3-D, artificial intelligence–powered bots this fall across five classrooms, including Hamilton’s, that will allow students to ask any question at any time.

It is not about replacing humans, said Muhsinah Morris, a senior assistant professor of education, who is spearheading the AI pilot. The goal, she said, is “to enhance students’ ability to get access to information that is cultivated in your classroom.”

AI bots in the classroom are not new—plenty of professors have built and deployed tools to help students get after-hours answers. The Georgia Institute of Technology was among the first, launching its “Jill Watson” bot in 2016.

But over the last two years, the rise of generative AI has added an array of new possibilities and concerns.

At Morehouse, the approach goes beyond typing questions into a chat bot.

At the historically Black Atlanta men’s liberal arts college, the new AI bots are trained on a professor’s lectures and course notes, plus other material the faculty deem important. Students access the bot with a Google Chrome web browser, which displays a 3-D figure, or avatar, designed by the professor. Students can type in a question box or speak aloud in their native language and get a verbal response back in a way that mimics the classroom experience.

With privacy issues in mind, Morris said, any questions students ask will not be used to train any large language model.

“Sometimes you want to have the conversation; you want it to be warm, you want it to be like your instructor,” Morris said. “The conversational piece is really important for [students] to feel comfortable and seen regardless of the modality.”

Hamilton, chair of Morehouse’s Africana studies and history department, will be among the pilot users this fall. One of his main concerns is that the technology will eventually replace faculty members.

“I’m looking at it [thinking], ‘If AI is this advanced now, it’ll only advance further in the future,’” he said. “‘Are we phasing ourselves out of a job?’”

His concerns are echoed across the nation. The role of AI bots in the classroom has spurred speculation—and fear—in recent years. The discussion hit a fever pitch in April when Stan Sclaroff, dean of arts and sciences at Boston University, said AI could be used as a “tool” while teaching assistants were on strike. The following month, Arizona State University chief information officer Lev Gonick suggested the university may allow AI “augmentation” in the classroom to help with a large number of entry-level English courses.

Faculty unions, swift to condemn such statements, sprang into action, creating formal guidelines to safeguard against AI. Most recently, the National Education Association passed a policy statement last Thursday calling for educators to have a role in implementing and regulating the technology.

“Above all else, the needs of students and educators should drive AI’s use in education—and educators must be at the table to ensure these tools support effective teaching and learning for all students,” NEA president Becky Pringle said.

Morehouse does not have a union specific to the college; instead, faculty members are represented by the American Association of University Professors.

An AAUP representative did not immediately respond to a request for comment, but unions—including the NEA and the American Federation of Teachers—previously told Inside Higher Ed that institutions without a strong union should push for college administrators to codify AI policy language in an employee handbook.

Morehouse is also unusual in that the college does not have teaching assistants, so the AI avatars would not be replacing anyone. However, Morris said she believes that even if the college had TAs, the technology would still be used to help, not replace, faculty.

“It doesn’t have to be an all-or-nothing with these tools,” she said. “The reason we’re doing it is for humanity to have an easier existence, to make and minimize the tasks we have so we’re not always working. I don’t see erasing the human component of computing, ever.”

Despite his skepticism, Hamilton said he believes Morehouse can use the tool for good.

“I still want to embrace the technology, and I think it’s all in the purpose of who’s using it,” he said. “It can be used and manipulated, but if we’re responsible—and we have been with the metaverse and virtual reality—I don’t think it’ll be any different with AI.”