“People are expecting a wave” of AI business innovation, Vinod Khosla, co-founder of Sun Microsystems and founder and chairman of Khosla Ventures, said. “What’s going to hit us is a tsunami.”

zhuweiyi49/Getty Images

When Brian Halligan and Dharmesh Shah were students at the Massachusetts Institute of Technology in the early 2000s, they were given 60 minutes to pitch one of their professors about their start-up idea—a software platform that would help companies market and sell more effectively. But the professor was “loquacious” and spoke for 59 of the 60 minutes, Halligan said at an exclusive gathering focused on the business of artificial intelligence held at MIT last week. In the remaining one minute, Halligan and Shah delivered their pitch—and were delighted when the professor agreed to provide substantial funding for their idea.

But the fledgling start-up founders still needed more money to make their vision a reality. So, they turned to their MIT classmates, who were not themselves rich, though their parents were, according to Halligan.

“We raised $900,000 from our classmates’ parents,” Halligan said as the audience erupted in laughter. “And we needed customers, and nine of our first 10 customers were [MIT] Sloan grads. And we needed some employees, and nine of our first 10 employees were Sloan grads.” Today, their company, HubSpot, holds approximately one-third of the market share in marketing automation solutions.

The MIT gathering drew a critical mass of venture capitalists together with start-up founders—all focused on artificial intelligence. (Many of the latter were affiliated with MIT.) The opportunity was “like catching fish in a barrel,” said John Werner, the energetic host of the event, managing director at Link Ventures, a venture capital firm, and former head of innovation and new ventures at the MIT Media Lab’s Camera Culture Group.

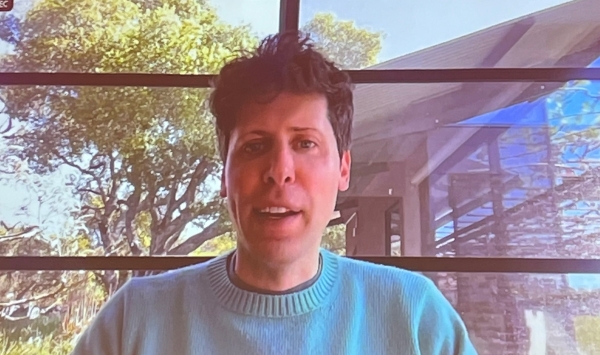

Faculty, staff and administrators should brace themselves for an oncoming AI “tsunami” in business sectors that include education, according to the star-studded academic and industry leaders who spoke at the MIT gathering. OpenAI CEO and co-founder Sam Altman, Sun Microsystems co-founder Vinod Khosla and Sandy Pentland, co-leader of the World Economic Forum Big Data and Personal Data initiatives, among others, shared insights about market opportunities, risk mitigation, and decision making related to ChatGPT and other generative-AI tools.

But some emerging entrepreneurs who attended raised concerns that differed, in some cases, from those of the big-tech establishment, including a wish for greater emphasis on privacy and inclusivity.

The AI-in-education market is expected to grow from approximately $2 billion in 2022 to more than $25 billion in 2030, with North America accounting for the largest share.
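For a sense of scale, the forecast above can be restated as an implied annual growth rate. The sketch below is a back-of-the-envelope calculation, not part of the forecast itself: it assumes steady year-over-year compounding and treats $25 billion as the 2030 value, although the forecast says "more than" that figure. The function name `implied_cagr` is illustrative. Under those assumptions the implied rate comes to roughly 37 percent a year.

```python
def implied_cagr(start_value, end_value, years):
    """Return the compound annual growth rate implied by two endpoint values."""
    return (end_value / start_value) ** (1 / years) - 1


# Approximate figures from the forecast, in billions of dollars.
# Assumption: $25 billion is used as a floor for the 2030 value.
rate = implied_cagr(2.0, 25.0, 2030 - 2022)
print(f"Implied annual growth: {rate:.1%}")
```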

Artificial intelligence is expected to begin outperforming humans on most cognitive tasks this century, according to experts. As such, the Future of Life Institute recently published a letter calling on AI labs to immediately pause for at least six months the training of AI systems more powerful than GPT-4. The letter was signed by Turing Award recipient and “godfather of AI” Yoshua Bengio, leading AI researcher Gary Marcus, Apple co-founder Steve Wozniak and 26,000 others. Some criticized the institute for accepting funding from the Musk Foundation (Elon Musk also signed the letter) and the letter for prioritizing hypothetical apocalyptic scenarios over present-day concerns related to AI, such as racist and sexist bias.

During the MIT gathering, Altman said in a Zoom call that the letter was “missing most technical nuance about where we need the pause.”

“I think moving with caution and an increasing rigor for safety issues is really important,” Altman continued. “The letter, I don’t think, is the optimal way to address it.”

Altman also suggested that, moving forward, bigger language models may not always be better. This caught many attendees by surprise, given recent large language model parameter counts—a measure of the number of connections between artificial neurons. For example, the chat bot GPT-2, announced in 2019, relied on 1.5 billion parameters to answer questions in natural language. Then, GPT-3, announced in 2020, had a staggering 175 billion parameters. (ChatGPT is powered by GPT-3.5.) Though OpenAI did not disclose the number of GPT-4’s parameters when it entered the public consciousness this year, some, including many at the MIT gathering, speculated that the number exceeds 100 trillion.

“I think we’re at the end of the era where it’s gonna be these giant models, and we’ll make them better in other ways,” Altman said.

D'Agostino for Inside Higher Ed

The following edited, condensed excerpts from the MIT gathering suggest that the business sector expects to lead the AI transformation, that many industry leaders fear missing out, even as they acknowledge the possibility of catastrophic failure, and that humans and machines define “trust” differently.

Industry Expects to Lead

Henrick Landgren, partner at EQT Ventures who earlier built the analytics team and contributed to all major initiatives at Spotify

During the years that I’ve been around in the tech industry, I’ve seen a lot of bubbles come and go … We’ve seen great specific developments popping up in [education, health care and environmental] sectors, but we have not yet seen the spring of these sectors … Entrepreneurs will lead this transformation. Entrepreneurs are the driving force for sparking innovation.

Big Tech Plans to Accelerate Capability

Sam Altman, CEO and co-founder of OpenAI

Maybe parameter count will trend up for sure. But this reminds me a lot of the gigahertz race in chips in the 1990s and 2000s where everybody was trying to point to a big number.

It’s important that we keep the focus on rapidly increasing capability … We’re not here to jerk ourselves off on parameter count.

Business Leaders Have FOMO

Delphine Nain Zurkiya, senior partner at McKinsey

Up until five years ago, it was really the CIO, if they existed, who woke up in the morning and thought about [AI]. But the timeline we had as we helped them with the strategy was a 10-year timeline. We had three years to think about it, three years to experiment and three years to see if you could scale it.

This has completely changed. FOMO [fear of missing out] is the right word. A lot of our executives come to us to ask, “Is this real?” It’s no longer [only] a CIO conversation.

Lan Guan, senior managing director at Accenture

The main theme I’m hearing from the boardroom is this accelerated timeline—the urgency for all of my clients to start embarking on their generative-AI journey. ChatGPT did a great job catalyzing everything … We are in these conversations every day … I’ve been consulting for 20-plus years. This is a kind of urgency I have never seen before.

The money is there … This is prime time for start-ups. I don’t need to cite the number of venture capitalists jumping into this space. I’m probably having 20 conversations every day with start-ups.

This is the data liberation movement … This is a complete paradigm shift … Now all of my clients, enterprise-level organizations, have almost an obligation to get their data ready, to bring their proprietary data into the large language model … so the power of the models can be activated.

Education as a Sector Has Changed

Stephen Wolfram, founder and CEO of Wolfram Research and creator of Mathematica, Wolfram|Alpha and Wolfram Language

ChatGPT is pretty decent at making analogies, of noticing that something over here is like something over there. I’m pretty big on making grand analogies, too—for example, on the relationship between gravitation theory and metamathematics.

One of the things that may very well happen is that, as ChatGPT-like things grind down further on the knowledge we have put out there in the world, it will notice more and more of these kinds of analogies. It will notice that the pattern of this is sort of like the pattern of that.

It’s already noticed some things that we should be embarrassed we didn’t notice before … We know that there is a syntactic grammar of language, that we put together nouns and verbs and other parts of speech. And we know there is a logic that defines how language can be made meaningful. But there’s more. There’s another layer of how you put together sentences, which could plausibly be meaningful, and it discovered that. We should have done that, but we didn’t.

Two things will happen in education. One is that the set of people who will be able to use computation will be a lot larger. So, those in the literature department will be able to do computational literature. That’s the good news.

The bad news is all the people who are teaching low-level programming … Should you learn to program? Probably not. You should learn how to think about things computationally. You should learn how to formulate things so that there’s a chance that you can make a systematic computational approach to it.

Industry Doesn’t Rule Out Catastrophic Failures

Alexander Amini, founder, Themis AI

“Trust” is a loaded term that people have trouble wrapping their head around. What does it mean for an AI model to be trustworthy, especially from the point of view of enterprise companies?

In practice having a 100 percent trustworthy model is very far away, even with today’s advances. Ninety percent, on average, is what we’re seeing in the lab. What trustworthy [should mean] is this idea that, if the model says that it’s 90 percent accurate in the lab, then if you deploy it, nine out of 10, hopefully, will be accurate.

In practice, this is not at all the way AI models work. If it says 90 percent accurate in the lab, what it really means is that it’s probably going to be 99 to 100 percent accurate most of the time, but then 0 percent accurate in some catastrophic failures that come all of a sudden … They happen very quickly. They’re hard to predict. That’s the real challenge behind AI, that these errors and failures that come with it are very unexpected.

How can we build AI models and take existing AI models that are already in deployment and make them risk aware? That means, transform them so that they can understand and convey to us as humans when they’re going to fail—even before they fail.

Errors are acceptable if we can be warned before. But that’s a huge challenge for companies to engineer models in that way, because today’s AI technologies don’t behave like that. They’re very opaque. They don’t tell us when they’re going to fail before they fail.
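Amini's contrast between an average accuracy figure and failures that arrive all at once can be illustrated with a small numerical sketch. The per-batch numbers below are hypothetical, chosen only to match his description, and are not measurements from Themis AI or any real model. Both lists average 90 percent, but one spreads its errors evenly while the other is perfect until a single batch fails completely.

```python
from statistics import mean

# Hypothetical accuracy on ten deployment batches for two models.
# Both average 90 percent, but the errors are distributed very differently.
evenly_spread = [0.90] * 10              # about one error in ten, every batch
failure_clustered = [1.00] * 9 + [0.00]  # perfect, then one total failure


def summarize(batch_accuracies):
    """Report the average accuracy alongside the worst single batch."""
    return {
        "average": mean(batch_accuracies),
        "worst_batch": min(batch_accuracies),
    }


print("evenly spread:    ", summarize(evenly_spread))
print("failure clustered:", summarize(failure_clustered))
```

The headline average cannot tell the two apart; only the worst-batch figure exposes the difference, which is why a warning before failure matters more than the average alone.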

Some Business Leaders Are Concerned

Vaikkunth Mugunthan, CEO of DynamoFL, a company that delivers regulation-compliant AI

The most important thing right now is privacy.

Some Are Less Concerned

Dave Blundin, serial entrepreneur and co-founder and managing partner of Link Ventures

I’m optimistic. Most of the dystopians think that this thing is going to somehow become conscious and go Terminator on us. These things don’t do that. They’re incredibly powerful in ways that humans aren’t powerful. They’re not trying to become human. They’re doing whatever they’re directed to do.

Either Way, Business Expects AI to Drive Massive Change

Marc Tarpenning, co-founder of Tesla and venture partner at Spero Ventures

In computer science, getting to that 90 percent place [for autonomous machines] is relatively easy in retrospect. It’s that last 10 percent that is a killer.

[Years ago, people spoke of] that whole Silicon Valley hype machine. “The internet? It’s stupid. Nobody is going to really use it. It is just smoke and mirrors from Silicon Valley.” But of course, that was the beginning of the internet changing everything that we do.

We’re in a similar moment right now with AI … but I’m not super into having AI take over entirely yet.

Vinod Khosla, co-founder, Sun Microsystems, and founder and chairman of Khosla Ventures

People are expecting a wave [of AI business innovation]. What’s going to hit us is a tsunami.

[In response to the question, “Have we had a tsunami in your lifetime?”] Not in business. The largest transition we’ve seen was in agricultural employment, which went from 50 percent of U.S. employment in the year 1900 to a few percent by the 1970s … We had multiple generations to adjust and relocate people to the cities. This will be so much faster. We will have a hard time adjusting.

An Academic Warns of Autonomous AI Risks

Sandy Pentland, co-leader of the World Economic Forum big data and personal data initiatives, Toshiba Professor of Media Arts and Sciences and professor of information technology in the MIT Sloan School of Management, director of the MIT Human Dynamics Laboratory and the MIT Media Lab Entrepreneurship Program, and one of Forbes’ seven most powerful data scientists in the world.

If you try to do anything [with AI] other than augment humans, you’re taking on a liability … You have to be ready for a lawsuit. I’ve worked with a lot of regulators all around the world, and they’re coming for you.

If you’re helping people … and leaving that human decision—the liability—in the hands of a person, you’ll be OK … What you have to do is keep track … When somebody comes for you, and they will, you can say, “this is what we did, and it affected women this way, and Blacks this way, and kids this way.” You have to keep track of that because you’ll have to defend yourself.

Emerging Entrepreneurs’ Views May Differ from the Establishment

In an email following the event, Werner shared feedback he received from attendees, which included, “You are creating AI Nation, and Cambridge is the capital.” “The lineup was beyond fantastic.” “Astonishing event. The attendees were gushing!” “Definitely close to Oscar level.” “There is a good chance I will remember my life as it was before and after Reverse Pi Day, 2023 [a math joke referring to the April 13 date of the gathering].” “Simply extraordinary and great privilege to be there.” “My brain will never be the same.” “So grateful.” “AWESOME.” “The guys looking to hire were pumped to speak with good candidates and got great leads.” “This event is on the fast track to become one of the most important AI events ever.” “I learned that none of us are currently being ambitious enough.”

But the first few individuals with whom Inside Higher Ed spoke at the gathering’s afterparty offered some contrasting views.

Anonymous, founder of an AI start-up focused on helping businesses leverage the benefits of AI, who asked for confidentiality out of concern that negative feedback about the gathering might jeopardize funding opportunities from venture capitalists in attendance.

There were a few people [at MIT’s gathering] who were talking about privacy, but not many. Some tried to bring it up but were shot down, or there was a vague answer.

I’m not worried about a dystopian or Terminator kind of world in the next five or 10 years. I’m worried about how difficult it is for people to trust anything on the internet anymore. We are seeing effects of this right now.

Mercy Chado, a research associate focused on genomics and bioinformatics in the therapeutics lab of the Cystic Fibrosis Foundation

There was a lot of, “We have this new technology, and it’s going to change the world.” But they didn’t talk enough about privacy.

Vincent McPhillip, founder and CEO of Knomad, a start-up focused on helping people find flexible, meaningful work

The conference was excellent … A lot of us are experiencing this [moment with AI] individually behind our computers, and the conference allowed us to come together and share this moment together.

As a person of color, it’s really hard for me not to see other Black and brown people in the audience. There are some here, but not nearly enough, particularly when you look at the impact that this technology is likely to have on our community. We need to make more effort in making sure that we have more diverse representation so that this next wave doesn’t become one that leaves us behind.

There’s a conference going on in parallel to this one. It’s a local organization that supports Black professionals in the Boston area. It started yesterday, and I’ll be there tomorrow. When I was signing up for that conference, there wasn’t even a technology field for me to choose. Then I come [to MIT’s conference], and I’m swimming in AI. It’s such a weird dichotomy.

I was complaining to my brother yesterday that I’m really hoping there will be a day when I don’t have to continue straddling these two worlds, literally shuttling back and forth between them. That’s the yearning that I feel when I look up and around in rooms like this.